Want a free year of Arize’s paid plan? Join Aakash’s Bundle. 1 annual subscription to the newsletter gets you a paid year of Arize free ($1260 value).

Check it out on Apple, Spotify, or YouTube.

Brought to you by:

Miro: The innovation workspace is your team’s new canvas

Jira Product Discovery: Plan with purpose, ship with confidence

Maven: Get $100 off Aman’s course with my code ‘AAKASHxMAVEN’

Amplitude: Test out the #1 product analytics and replay tool in the market

Today’s Episode

Every PM has to build AI features these days.

And that means a completely new skill set:

AI prototyping

Observability, Akin to Telemetry

AI Evals: The New PRD for AI PMs

RAG vs Fine-Tuning vs Prompt Engineering

Working with AI Engineers

So, in today’s episode, I bring you a 2-hour crash course in becoming a better AI PM:

I’ve teamed up with Aman Khan.

When it comes to people creating AI PM content, Aman Khan is amongst the most insightful and informed. And that's because he's been an AI PM since 2019:

He worked at Cruise on self-driving cars.

He's worked with Spotify on their AI systems.

And now he works at Arize, one of the leading observability and evals companies.

Here’s what you’ll learn if you watch the whole thing:

1. AI Prototyping Best Practices in June 2025

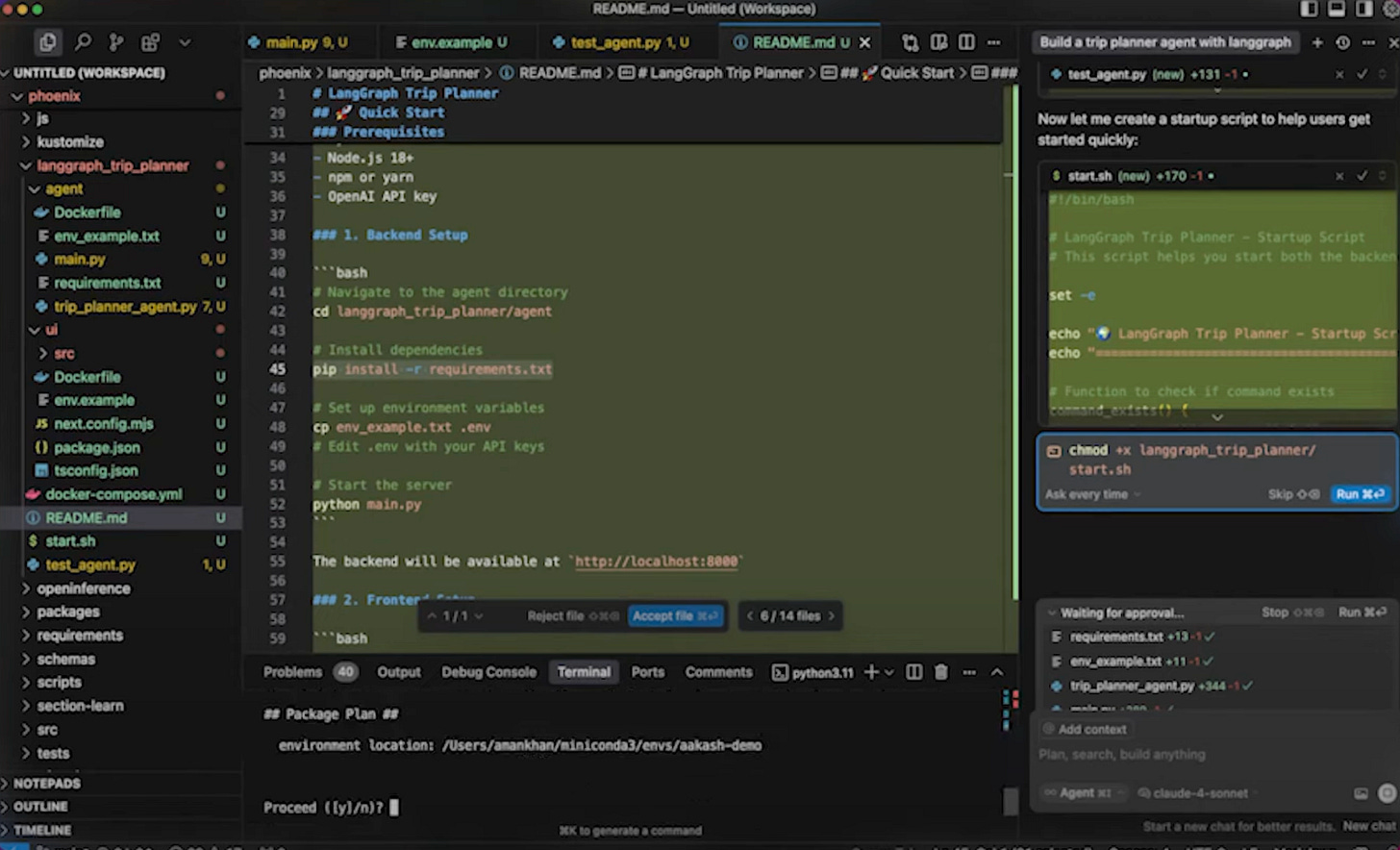

Aman has been using all the tools - from Lovable, Bolt, v0 and Replit to Cursor and Windsurf. His pick as the best tool for AI PMs? Cursor.

And here's why: PMs have to get into the code. While tools like Bolt and Lovable are perfect for quick mockups, when it comes to building evals and the like, you want access to a code-based agent.

Cursor will be slower to get you to that first working prototype. But it will get there through text prompts alone, and what you end up with is real code you can learn from and keep using in your work afterward.

2. How to Add Observability

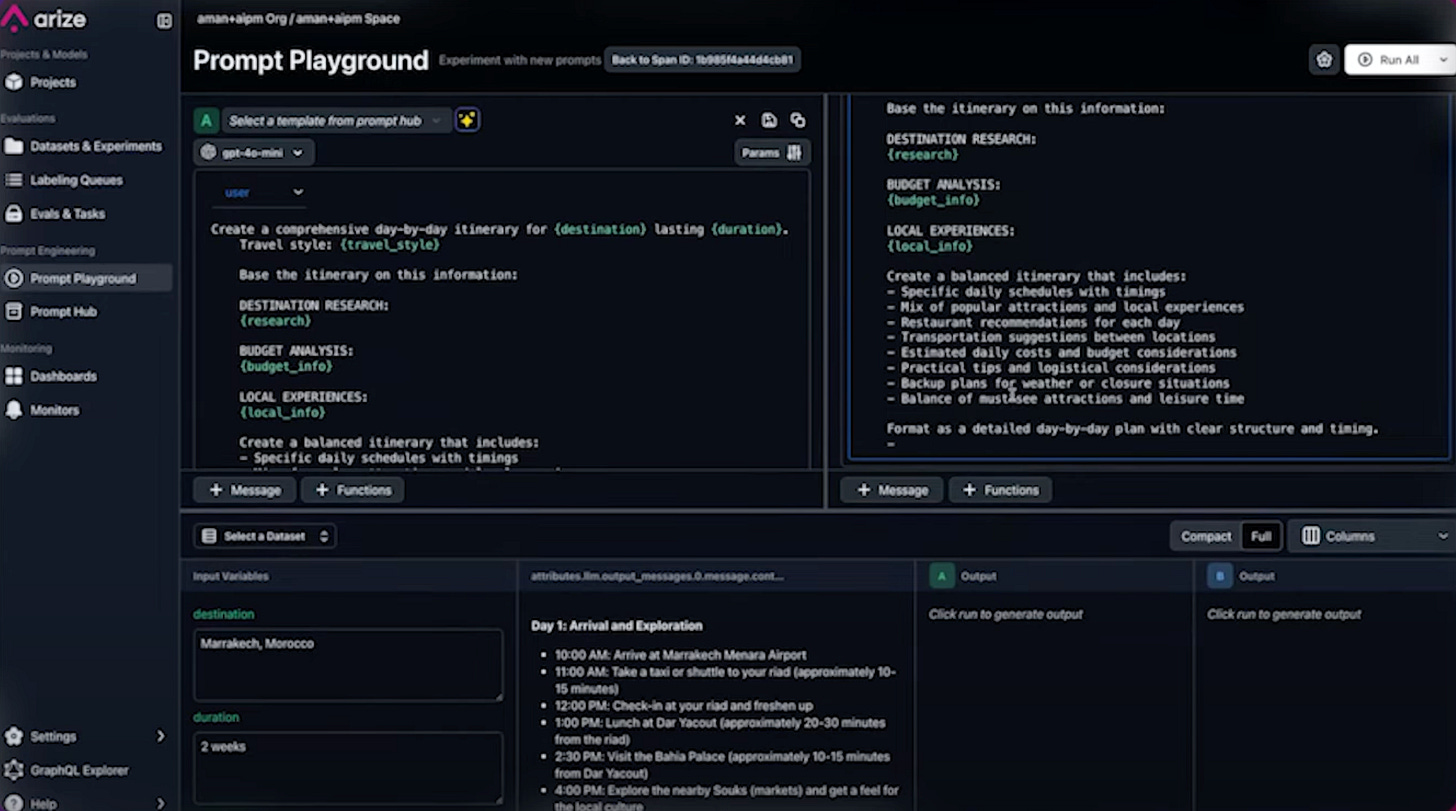

Once you have an AI product, you can't just jump to evals. Just like regular products need telemetry for analytics, AI products need traces for evals.

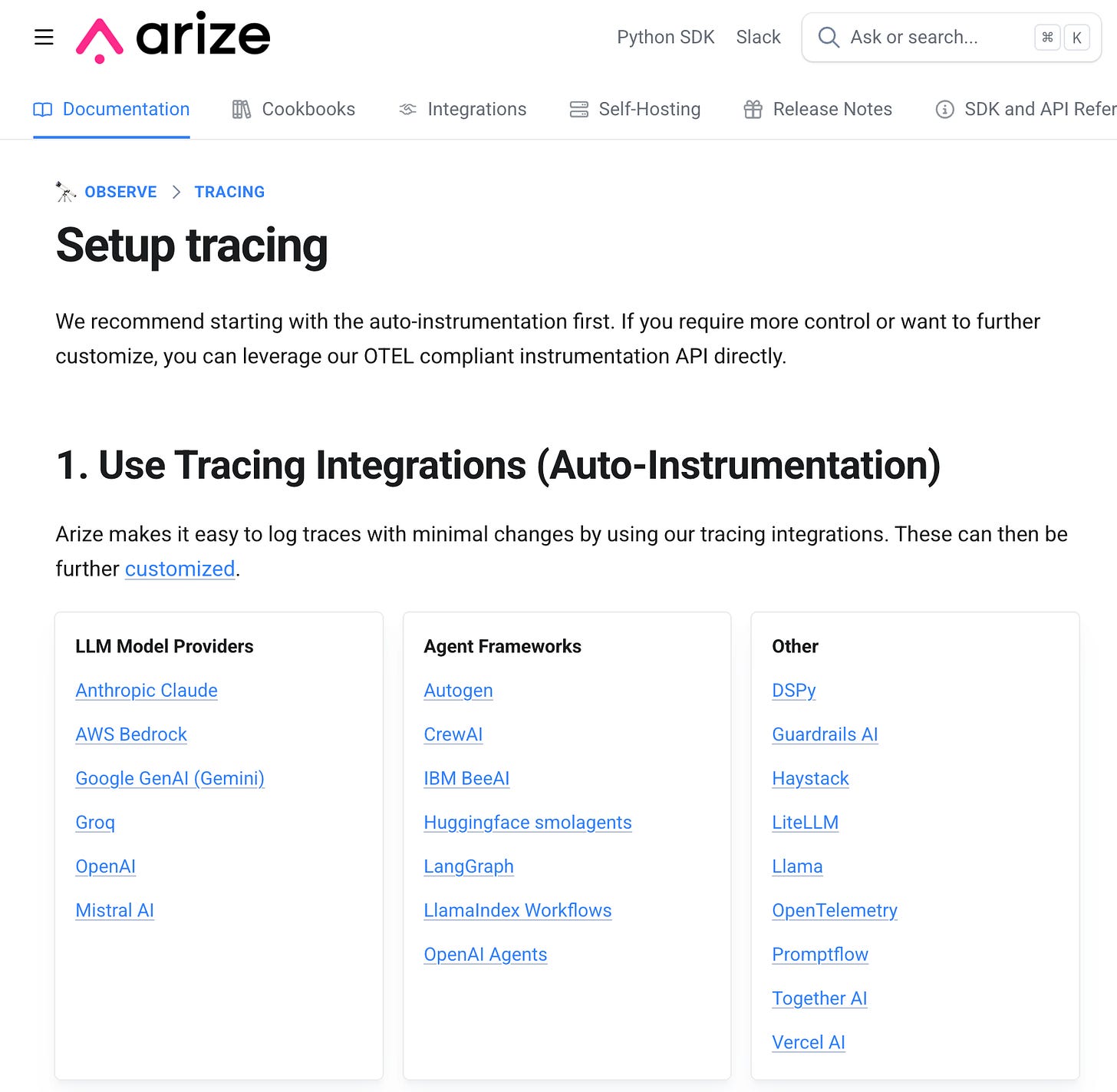

You just build off a template (like Phoenix) or point Cursor to an observability tool's documentation, and it will add what you need to see what's going on (we used Arize).

The implementation is literally adding decorators to your functions - a few lines of code that wrap your AI calls and create visual traces.

What you get is a flowchart showing exactly which agents fired, what tools they used, and how long each step took. Suddenly you can A/B test different models and prompts with real data instead of gut feelings.
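To make the decorator idea concrete, here is a minimal sketch in plain Python. It is not the Arize or Phoenix API. The `traced` decorator, the `TRACE_LOG` list, and the `plan_trip` function are all hypothetical names I made up for illustration. A real observability tool would export this data as spans instead of appending to a list, but the shape is the same: wrap the function, record what ran and how long it took.

```python
import functools
import time

# Hypothetical in-memory trace store. A real observability tool
# would export spans to a backend instead of appending to a list.
TRACE_LOG = []


def traced(span_name):
    """Decorator that records name, duration, and status of each call."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            status = "ok"
            try:
                return func(*args, **kwargs)
            except Exception:
                status = "error"
                raise
            finally:
                # Runs on both success and failure, so every call is traced.
                TRACE_LOG.append({
                    "span": span_name,
                    "function": func.__name__,
                    "duration_s": time.perf_counter() - start,
                    "status": status,
                })
        return wrapper
    return decorator


@traced("itinerary_agent")
def plan_trip(destination):
    # Stand-in for a real LLM call (assumption for this sketch).
    return f"Day 1 in {destination}: walking tour"
```

Calling `plan_trip("Lisbon")` returns the itinerary as usual and leaves one entry in `TRACE_LOG` with the span name, duration, and status. That record is the raw material a tracing UI turns into the flowchart view.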

3. Evals: From Vibe Coding to Thrive Coding

Up to this point, everything is "vibe coding" - looking at outputs and deciding if they feel good or bad. But that doesn't scale.

The solution is building LLM-as-judge systems using simple templates: give the AI a role, provide context, define what good looks like, then ask for a label.

Use text labels like "friendly" vs "robotic" instead of numeric scores - LLMs handle descriptive language better than numerical scales.
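Here is a rough sketch of what such a judge template could look like. The wording of the prompt, the trip planner scenario, and the names `JUDGE_TEMPLATE`, `build_judge_prompt`, and `parse_label` are my own assumptions for illustration, not the exact template from the episode. It follows the four parts: role, context, what good looks like, then a request for a text label.

```python
# Hypothetical judge template: role, context, definition of good, label request.
JUDGE_TEMPLATE = """You are an expert reviewer of travel assistant replies.

Context: the reply below was written by an AI trip planner for a user.

A "friendly" reply is warm, conversational, and addresses the user directly.
A "robotic" reply is stiff, generic, or reads like a template.

Reply to evaluate:
{reply}

Answer with exactly one word: friendly or robotic."""

ALLOWED_LABELS = ("friendly", "robotic")


def build_judge_prompt(reply):
    """Fill the template with the output you want judged."""
    return JUDGE_TEMPLATE.format(reply=reply)


def parse_label(judge_output):
    """Map raw judge text onto an allowed label, or None if it doesn't fit."""
    cleaned = judge_output.strip().lower().strip(".\"'")
    return cleaned if cleaned in ALLOWED_LABELS else None
```

You would send the result of `build_judge_prompt(...)` to whichever model you use as the judge, then run its answer through `parse_label`. Returning `None` for anything off-script means a badly formatted judge response shows up as a gap instead of silently counting as a label.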

The meta-insight: you need evals to check your evals. When your judge marks outputs as "friendly" while your human labels say "robotic," that 0% match rate tells you exactly where to improve your evaluation system.
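Checking the judge against human labels comes down to simple arithmetic. The sketch below is one way to compute it, with hypothetical helper names (`match_rate`, `disagreements`) and made-up labels. It assumes both lists are in the same order, one label per output.

```python
def match_rate(judge_labels, human_labels):
    """Fraction of outputs where the LLM judge agrees with the human label."""
    if len(judge_labels) != len(human_labels):
        raise ValueError("judge and human label lists must be the same length")
    if not human_labels:
        raise ValueError("need at least one labeled example")
    matches = sum(j == h for j, h in zip(judge_labels, human_labels))
    return matches / len(human_labels)


def disagreements(judge_labels, human_labels):
    """Indexes of the examples worth reviewing by hand."""
    return [
        i for i, (j, h) in enumerate(zip(judge_labels, human_labels))
        if j != h
    ]


# Made-up labels for illustration only.
judge = ["friendly", "robotic", "friendly", "robotic"]
human = ["friendly", "robotic", "robotic", "robotic"]
rate = match_rate(judge, human)       # 3 of 4 agree
to_review = disagreements(judge, human)
```

The list of disagreeing indexes is usually more useful than the rate itself, because it tells you which examples to read before you rewrite the judge prompt.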

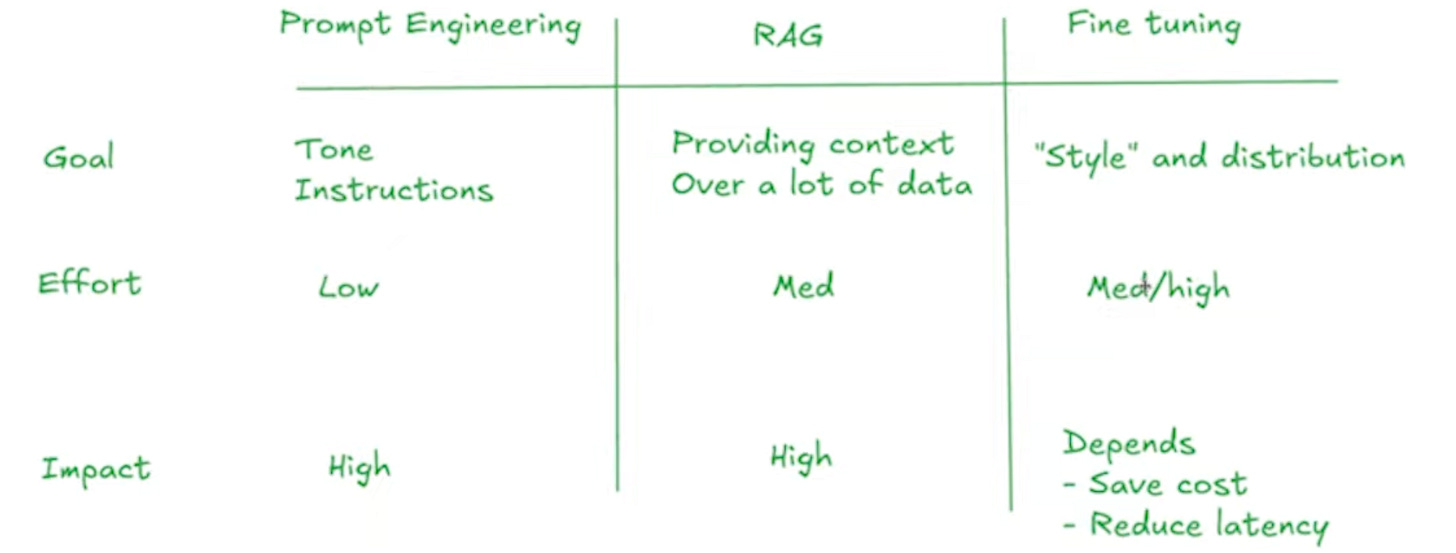

4. Prompt Engineering vs RAG vs Fine-Tuning

Most PMs fine-tune when they should just prompt better. Aman shows a side-by-side where a well-structured prompt outperforms a fine-tuned model on the same task.

His framework: Start with prompting (gets you 95% of results), add RAG when you need external data, only fine-tune for cost or speed optimization. Change your trip planner's tone and add discount offers just by editing the prompt - faster output, better user experience, zero additional infrastructure.
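As a small illustration of how cheap a prompt change is, here is a sketch of a system prompt builder for a trip planner. The function name `build_system_prompt`, its parameters, and the prompt wording are all hypothetical. The point is only that tone and a discount offer are plain text edits, with no retraining and no new infrastructure.

```python
def build_system_prompt(tone, discount_offer=None):
    """Assemble a trip planner system prompt from plain text pieces."""
    lines = [
        "You are a trip planning assistant.",
        f"Write in a {tone} tone.",
    ]
    if discount_offer:
        # Adding an offer is just one more instruction in the prompt.
        lines.append(f"When relevant, mention this offer: {discount_offer}")
    return "\n".join(lines)


# Made-up example values.
baseline = build_system_prompt("neutral")
updated = build_system_prompt("friendly", discount_offer="10% off hotel bookings")
```

Running both versions through the same eval set is then a direct way to see whether the edit actually helped.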

5. Working with AI Engineers

Your role is changing. AI engineers don't want Google Docs with requirements. They want you labeling data and defining what "good" looks like through evals.

Think of evals as your new PRDs. Instead of writing user stories, you're providing labeled datasets and saying "improve this metric." You become the voice of the end user in a world where success gets measured by eval scores, not feature completeness.

If you remember one thing…

AI PM isn't magic - it's understanding how to combine prompting, reasoning, and RAG into systems that work.

So use this information to get ahead of everyone else.

Key Takeaways

And don't miss Aman's story of how he transitioned from traditional PM to AI PM at Cruise:

Where to Find Aman

LinkedIn: Aman Khan

X: Aman Khan

Substack: aiproductplaybook.com

Company: Arize

Course: The AI PM Playbook

If you want to advertise, email productgrowthppp at gmail.

Up Next

I hope you enjoyed the last episode with Tom Occhino (where we gave an in-depth v0 tutorial). Up next, we have episodes with:

Dan Olsen - Author, Lean Product Playbook

John Beckmann - Head of Events + Webinars, Zoom

Tanguy Crusson - Head of Product, Jira Product Discovery

Finally, check out my latest deep dive if you haven’t yet: The Playbook to Land Your First PM Job.

Cheers,

Aakash

P.S. More than 85% of you aren't subscribed yet. If you can subscribe on YouTube and follow on Apple & Spotify, my commitment to you is that we'll keep making this content better and better.