Check out the conversation on Apple, Spotify and YouTube.


Today’s Episode

In today’s episode, we have one of the two voices I wanted most when I started this podcast: Teresa Torres. Alongside Marty Cagan (whom we’ve already had on), she was at the top of my guest list.

That’s because she has trained more than 17,000 PMs across 100 countries.

And in today’s episode, she’s breaking down one of the most important elements of PMing: discovery.

She gives a masterclass on applying the lessons from her smash hit book Continuous Discovery Habits in the AI age, covering both:

How to do discovery for non-AI features with AI tools

How to do discovery for AI features

If you’ve ever wondered why your product ideas sometimes flop, even when the interviews and research looked promising… you’re about to find out why!

Your Newsletter Subscriber Bonus:

For subscribers, I also write up a newsletter version of each episode. Thank you for having me in your inbox.

(By the way, we’ve also launched a podcast clips channel, where we’ll post the most valuable moments from each episode. Don’t miss out: subscribe here.)

The Journey Ahead

How to Do Discovery for All Features (AI or Non-AI) in the AI Age

a. Validate Before You Build

b. Continuous Discovery Habits

c. Opportunity Solution Tree

d. How to Interview Well

e. How to Document Interviews

f. How to Synthesize Interviews

g. How to Use AI Prototyping Correctly

h. How Discovery Drives the Roadmap

How to Do Discovery for AI Features

a. The 5 Key Steps of Discovery

b. How to Do Error Analysis

1. How to Do Discovery for All Features (AI or Non-AI) in the AI Age

1a. Validate Before You Build

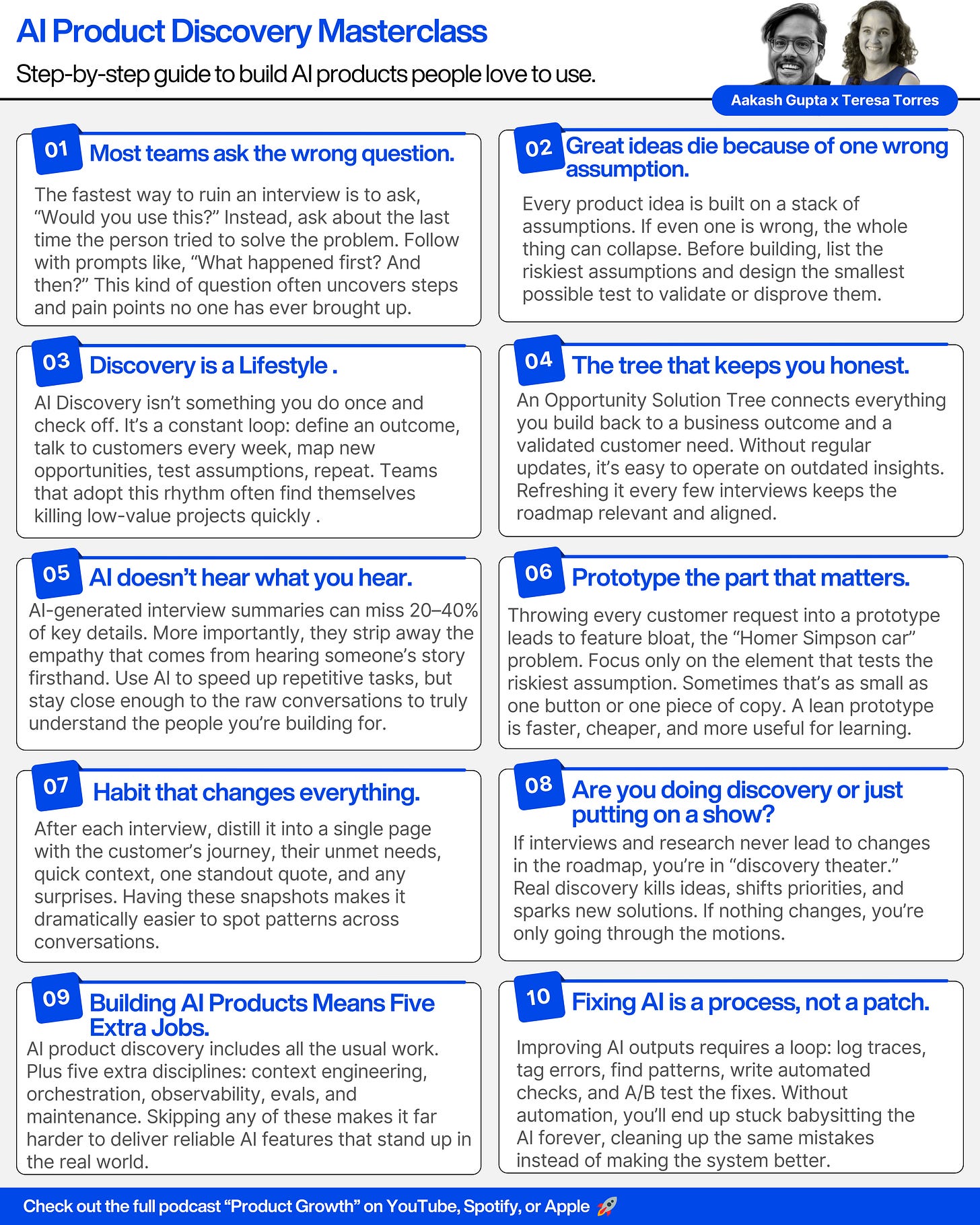

Every idea is a stack of assumptions. Building the full solution before you test those assumptions wastes time if any core belief is false.

Teresa’s rule: test assumptions early and cheaply.

How to do it in practice:

Break the idea into discrete assumptions (value, discoverability, behavior change, technical feasibility, trust, etc.).

Rank assumptions by risk (which one failing would kill the idea?).

Design the smallest experiment that could disprove the assumption: a quick usability session, a short survey, a manual or “concierge” version, or a lightweight prototype of the specific element. Teresa emphasizes inexpensive tests rather than polishing full features.

If the test disproves the assumption, stop or pivot. If it supports the assumption, move to the next riskiest belief.
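The rank-then-test loop above can be sketched in a few lines of Python. This is a minimal illustration, not Teresa’s tooling: the `Assumption` class, the 1–5 `risk` score, and the `run_test` callback (a stand-in for a real usability session, survey, or concierge test) are all hypothetical, as are the example assumptions and outcomes.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Assumption:
    statement: str
    category: str  # e.g. "value", "discoverability", "feasibility"
    risk: int      # hypothetical 1-5 score: how likely a failure here kills the idea


def riskiest_first(assumptions: list[Assumption]) -> list[Assumption]:
    """Order assumptions so the one most likely to kill the idea is tested first."""
    return sorted(assumptions, key=lambda a: a.risk, reverse=True)


def run_assumption_tests(assumptions: list[Assumption],
                         run_test: Callable[[Assumption], bool]) -> Optional[Assumption]:
    """Test riskiest-first; return the first disproved assumption, or None.

    run_test is a hypothetical stand-in for a cheap experiment and returns
    True when the evidence supports the assumption.
    """
    for assumption in riskiest_first(assumptions):
        if not run_test(assumption):
            return assumption  # stop or pivot: a core belief failed
    return None  # no tested assumption was disproved


ideas = [
    Assumption("Users will find the new export button", "discoverability", 3),
    Assumption("Users want to export reports at all", "value", 5),
    Assumption("We can generate PDFs fast enough", "feasibility", 2),
]
# Made-up outcomes standing in for real experiment results.
outcomes = {"value": True, "discoverability": False, "feasibility": True}
failed = run_assumption_tests(ideas, lambda a: outcomes[a.category])
print(failed.statement if failed else "All assumptions supported")
```

Because testing stops at the first failure, the feasibility assumption is never tested here: there is no point proving you can build something nobody can find.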

1b. Continuous Discovery Habits

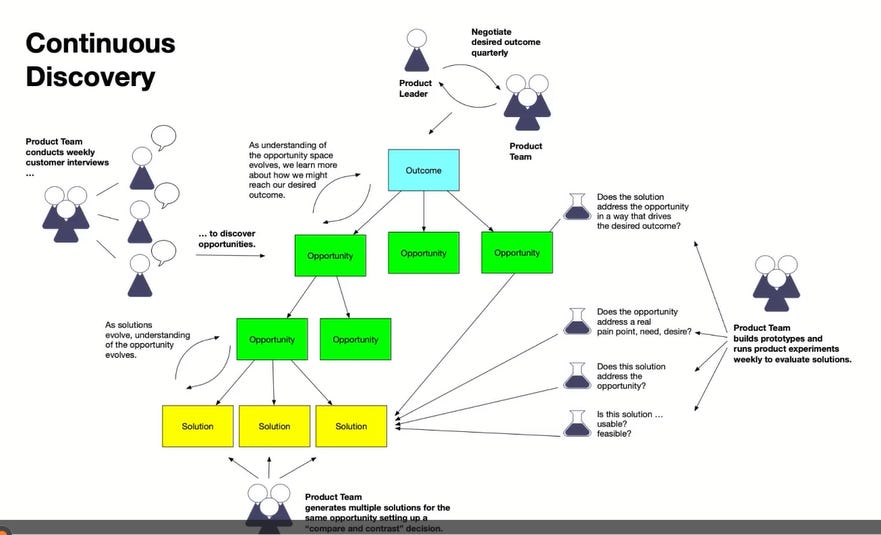

The heart of Teresa’s method is what she calls Continuous Discovery Habits.

Discovery shouldn’t be something you do once at the start of a project.

It should be a weekly rhythm. That means starting with a clear, measurable outcome, then running interviews every week, mapping the insights you collect into your opportunity space, picking one opportunity to focus on, brainstorming multiple possible solutions, and running small assumption tests before committing.

This loop keeps you close to your customers, prevents outdated assumptions from lingering, and ensures the roadmap is shaped by evidence, not guesswork.

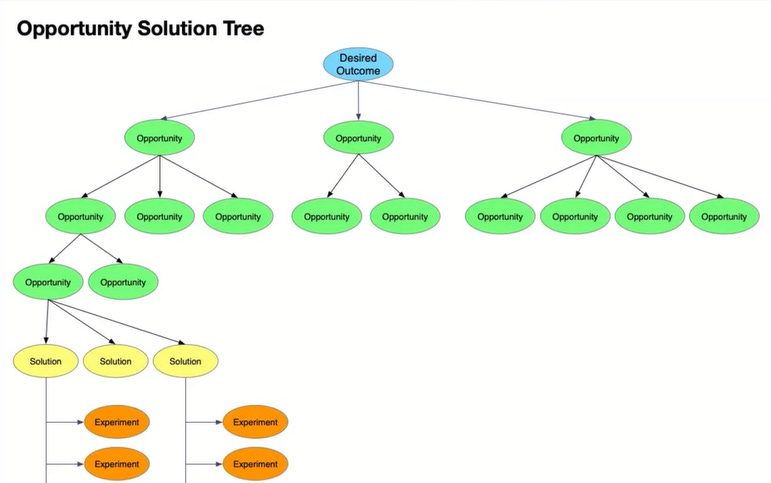

1c. Opportunity Solution Trees

A central tool in this process is the Opportunity Solution Tree, or OST.

Think of it as a living map that connects your business outcome at the top to the opportunities (unmet needs) you’ve uncovered in the middle, and then to the solutions you might try at the bottom.

The OST forces you to focus on the problem space before jumping to solutions and helps you see if you’re exploring multiple ways to solve the same problem or getting stuck on just one idea.
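To make the three levels concrete, here is a minimal Python sketch of an OST as a data structure, simplified to the outcome, opportunity, and solution levels described above. The class names and the `single_idea_opportunities` check (which flags opportunities with fewer than two candidate solutions) are illustrative assumptions, not a standard format; the example content is made up.

```python
from dataclasses import dataclass, field


@dataclass
class Solution:
    name: str


@dataclass
class Opportunity:
    need: str  # unmet need or pain point, phrased in the customer's terms
    solutions: list[Solution] = field(default_factory=list)


@dataclass
class OpportunitySolutionTree:
    outcome: str  # measurable business outcome at the root
    opportunities: list[Opportunity] = field(default_factory=list)

    def single_idea_opportunities(self) -> list[str]:
        """Opportunities with fewer than two candidate solutions (stuck on one idea)."""
        return [o.need for o in self.opportunities if len(o.solutions) < 2]

    def render(self) -> str:
        """Print the tree top-down: outcome, then opportunities, then solutions."""
        lines = [f"Outcome: {self.outcome}"]
        for opp in self.opportunities:
            lines.append(f"  Opportunity: {opp.need}")
            for sol in opp.solutions:
                lines.append(f"    Solution: {sol.name}")
        return "\n".join(lines)


tree = OpportunitySolutionTree(
    outcome="Increase weekly active teams",
    opportunities=[
        Opportunity("I can't tell what changed since my last visit",
                    [Solution("Activity digest email"), Solution("In-app changelog")]),
        Opportunity("Sharing a report with my manager takes too long",
                    [Solution("One-click share link")]),
    ],
)
print(tree.render())
print(tree.single_idea_opportunities())
```

The second opportunity gets flagged because it has only one candidate solution, which is exactly the "stuck on one idea" signal the tree is meant to surface.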

1d. How to Interview Well

Most teams fall into the trap of asking “Would you use X?” or “How much would you pay?” and people politely give answers they think you want to hear.

The problem is that these are guesses about the future, not reflections of actual behavior.

Teresa’s approach is to ask about the last time the person tried to solve the problem, then use temporal prompts like:

“What happened first?”

“What happened next?”

The goal is a detailed, chronological account.

This reveals real obstacles, workarounds, and context you’d never hear in a hypothetical conversation.

Technique: Excavate the Story in Sequence

She calls the method “excavate the story.” It’s a disciplined interview technique that forces chronological detail and behavioral evidence.

Start with one request: “Tell me about the last time…” and follow the sequence: “What happened first? Then what happened?”

Ask for specific artifacts and steps: “Show me the email, the screen, or the tool you used” (when possible).

Capture a salient quote in the customer’s words — one line that captures emotion or motivation.

After the interview, produce a single-interview snapshot (covered next).

Why does it improve synthesis?

Chronological accounts make it easy to map experience flows and spot where friction occurs.

Quotes and concrete artifacts keep the insight grounded in the user’s reality.

1e. How to Document Interviews

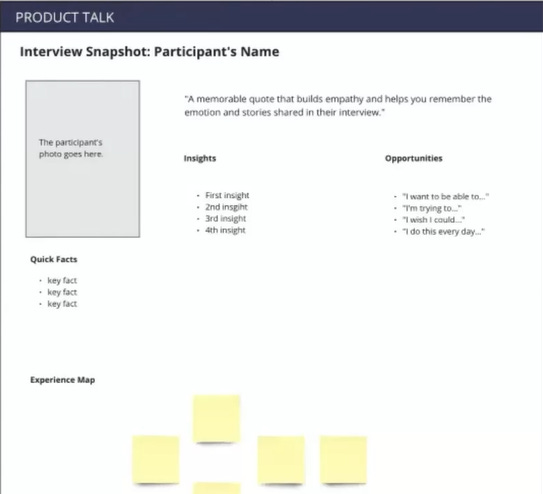

An interview snapshot is a short summary of a single interview. What it contains (the template she uses):

Experience map: the key moments in their story (step by step).

Opportunities: the unmet needs or pain points that surfaced.

Quick facts: segment info and contextual details that matter.

Salient quote: one memorable line in the user’s own words.

Miscellaneous insights: surprises, follow-ups, or anything to flag.
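Because the snapshot is a fixed template, it maps naturally onto a small record type. The Python sketch below assumes one field per template section plus a hypothetical `participant` ID; the `to_text` rendering and the example content are made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class InterviewSnapshot:
    """One snapshot per interview, with one field per template section."""
    participant: str                 # hypothetical interview/participant ID
    experience_map: list[str]        # key moments, in story order
    opportunities: list[str]         # unmet needs or pain points that surfaced
    quick_facts: dict[str, str]      # segment info and relevant context
    salient_quote: str               # one line in the customer's own words
    misc_insights: list[str] = field(default_factory=list)

    def to_text(self) -> str:
        """Render a short, scannable summary for a shared team space."""
        facts = ", ".join(f"{k}: {v}" for k, v in self.quick_facts.items())
        parts = [
            f"Snapshot: {self.participant}",
            f"Quick facts: {facts}",
            f'Quote: "{self.salient_quote}"',
            "Experience map:",
            *[f"  {i}. {step}" for i, step in enumerate(self.experience_map, start=1)],
            "Opportunities:",
            *[f"  - {o}" for o in self.opportunities],
        ]
        if self.misc_insights:
            parts.append("Misc: " + "; ".join(self.misc_insights))
        return "\n".join(parts)


snap = InterviewSnapshot(
    participant="P07",
    experience_map=["Got a late-payment alert", "Searched email for the invoice",
                    "Exported to a spreadsheet to reconcile"],
    opportunities=["I can't find invoices quickly", "Reconciling takes manual work"],
    quick_facts={"segment": "SMB finance lead", "tool": "spreadsheets"},
    salient_quote="I spend Friday afternoons just hunting for invoices.",
)
print(snap.to_text())
```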

Why it’s essential:

Prevents drowning in raw transcripts. You’ll build a library of snapshots that are quick to scan and easy to cluster.

Snapshots make cross-interview synthesis fast and repeatable.

How to use snapshots:

Store them in a shared space. Review 3–4 at a time when updating the OST. Look for overlapping opportunities and repeated quotes that reveal patterns.

Quick action: after your next interview, create one snapshot and file it where the team can read it in under two minutes.

1f. How to Synthesize Interviews

How to synthesize properly:

Read snapshots in small batches (3–4). Identify recurring problems, patterns in behavior, or repeated language. Group those into opportunity clusters.

Translate clusters into the OST as opportunities. Each opportunity should be supported by at least one or two snapshots (evidence).

For each opportunity, list multiple solution ideas and the assumptions you’d need to test.

Teresa recommends integrating synthesis into your weekly rhythm: update the OST after every few interviews so your map stays evidence-based rather than aspirational.
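The batching and evidence-counting steps can be sketched as follows, assuming each snapshot has already been tagged by a person with short opportunity labels. Grouping by exact label match is a deliberate simplification (real clustering is a human judgment call), and `min_support=2` is an adjustable assumption based on the "one or two snapshots" guideline above. All names and data are hypothetical.

```python
def batches(ids: list[str], size: int = 4) -> list[list[str]]:
    """Split snapshot IDs into small batches (3-4) for a review session."""
    return [ids[i:i + size] for i in range(0, len(ids), size)]


def cluster_opportunities(snapshots: dict[str, list[str]],
                          min_support: int = 2) -> dict[str, list[str]]:
    """Map each opportunity label to the snapshot IDs that provide evidence for it.

    snapshots maps a snapshot ID to the opportunity labels a person assigned
    while reading it. Labels backed by fewer than min_support snapshots are
    dropped as not yet supported by enough evidence.
    """
    evidence: dict[str, list[str]] = {}
    for snap_id, labels in snapshots.items():
        for label in dict.fromkeys(labels):  # de-duplicate, keep order
            evidence.setdefault(label, []).append(snap_id)
    return {label: ids for label, ids in evidence.items() if len(ids) >= min_support}


snapshots = {
    "P01": ["hard to find invoices", "manual reconciliation"],
    "P02": ["hard to find invoices"],
    "P03": ["unclear pricing"],
    "P04": ["manual reconciliation", "hard to find invoices"],
    "P05": ["manual reconciliation"],
}
for batch in batches(list(snapshots), size=4):
    print("Review batch:", batch)
print(cluster_opportunities(snapshots, min_support=2))
```

Keeping the snapshot IDs next to each opportunity means every node you add to the OST carries its evidence with it.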

How to Use AI in Synthesis

AI can speed up laborious parts of discovery: synthesizing notes, drafting summaries, prototyping small elements, and generating test ideas.

It’s a thought partner that extends your capacity.

Relying on AI for synthesis without human review loses context and empathy.

Teresa warns that AI summaries can miss 20–40% of important detail. If you pull humans out of the loop, you also remove the learning that comes from listening to customers.

Teresa’s stance:

Use AI to augment your workflow, not replace it. Let AI do drafts and repetitive tasks, but keep humans in the loop to validate, correct, and preserve empathy.

Always spot-check AI summaries against raw interviews or snapshots.
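One way to make spot-checking routine is to sample a few AI summaries each week and compare each against details a human noted from the raw interview. The Python sketch below is an illustration under stated assumptions: the 20% sampling rate, the function names, and the naive substring matching (which will miss paraphrases) are hypothetical choices, not part of Teresa’s method.

```python
import random


def sample_for_review(summary_ids: list[str], rate: float = 0.2, seed: int = 0) -> list[str]:
    """Pick a random subset of AI summaries for a human to check (at least one)."""
    if not summary_ids:
        return []
    rng = random.Random(seed)
    k = max(1, round(len(summary_ids) * rate))  # hypothetical sampling rate
    return rng.sample(summary_ids, k)


def detail_coverage(ai_summary: str, human_details: list[str]) -> tuple[float, list[str]]:
    """Fraction of human-noted details found in the AI summary, plus the misses.

    Naive case-insensitive substring matching: paraphrased details will be
    reported as missed, so a person still needs to read the flagged cases.
    """
    text = ai_summary.lower()
    missed = [d for d in human_details if d.lower() not in text]
    covered = 1 - len(missed) / len(human_details) if human_details else 1.0
    return covered, missed


summary = "Customer struggles to find invoices and exports data to spreadsheets."
details = ["find invoices", "spreadsheets", "Friday afternoons"]
score, missed = detail_coverage(summary, details)
print(f"{score:.0%} covered; missed: {missed}")
```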

1g. How to Use AI Prototyping Correctly

When it comes to prototyping, AI is also a powerful tool, but only if you use it after you’ve identified the opportunity space and brainstormed solutions.

Watch out for the “Homer Simpson car” problem: cramming in every feature someone mentions until the product is bloated and unfocused.

The better approach is to prototype only the specific element that tests your riskiest assumption, rather than building an entire polished feature just because you can.

1h. Translating Discovery into the Roadmap

What “discovery theater” looks like:

Interviews are conducted, notes are taken, but the backlog and roadmap don’t change. No ideas are killed; the original plan gets built regardless.

Why do teams fall into it?

Organizational pressure to deliver features.

Misalignment on what discovery should influence.

Treating discovery as a checkbox rather than a decision driver.

Your pathway:

Make discovery outputs (snapshots, OST updates, test results) part of decision points. If your research doesn’t change a decision, ask why you did it.

Be realistic about scope: discovery won’t always overturn big strategic constraints, but it should inform what you build within those constraints.

2. How to Do Discovery for AI Features

2a. The 5 Key Steps

She breaks AI product discovery into five areas.

Each one is a distinct discipline you must treat explicitly.

Context engineering: Figure out what the model needs to see to produce useful outputs (what to feed the model, in what format, what background/context tokens matter).

Orchestration: Design how you will sequence model calls and combine outputs (breaking tasks into multiple LLM calls, deciding fallback behavior).

Observability: Build logging and tracing so you can see model inputs, outputs, and decision paths.

Evals (quality checks): Write automated tests that detect recurring errors and measure quality over time.

Maintenance: Plan for ongoing updates as models and data drift (model updates, prompt changes, new edge cases).

Each of these areas is critical to building AI features, and each should be informed by discovery work.
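Three of these areas (context engineering, orchestration, and observability) can be illustrated together in a small pipeline. This Python sketch is hypothetical throughout: `fake_model` stands in for a real LLM call, the draft-then-check sequence is just one possible orchestration, and evals and maintenance are left out.

```python
import json
import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Trace:
    """Observability: record each model call's input, output and status, in order."""
    steps: list[dict] = field(default_factory=list)

    def log(self, step: str, prompt: str, output: str, ok: bool) -> None:
        self.steps.append({"step": step, "prompt": prompt, "output": output,
                           "ok": ok, "ts": time.time()})


def build_context(question: str, docs: list[str], max_docs: int = 2) -> str:
    """Context engineering: decide what the model sees and in what format."""
    selected = docs[:max_docs]  # placeholder; a real system would rank docs by relevance
    context = "\n".join(f"- {d}" for d in selected)
    return f"Context:\n{context}\n\nQuestion: {question}"


def answer(question: str, docs: list[str], model: Callable[[str], str],
           trace: Trace) -> str:
    """Orchestration: draft an answer, check it with a second call, fall back if it fails."""
    prompt = build_context(question, docs)
    draft = model(prompt)
    trace.log("draft", prompt, draft, ok=bool(draft.strip()))

    check_prompt = (f"{prompt}\n\nDraft answer: {draft}\n\n"
                    "Is the draft supported by the context above? Reply YES or NO.")
    verdict = model(check_prompt)
    supported = verdict.strip().upper().startswith("YES")
    trace.log("check", check_prompt, verdict, ok=supported)

    if not supported:
        return "I'm not sure; routing this to a human."  # fallback behavior
    return draft


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call; no actual API is used."""
    if "Reply YES or NO" in prompt:
        return "YES"
    return "Exports are available on the Pro plan."


trace = Trace()
docs = ["Exports: Pro plan only.", "Pricing page", "FAQ"]
print(answer("Can I export reports?", docs, fake_model, trace))
print(json.dumps([{k: s[k] for k in ("step", "ok")} for s in trace.steps]))
```

Keeping every prompt and output in the trace is what makes later human review possible: you can see exactly what the model was given when it went wrong.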

2b. The Error Analysis Loop and How to Catch and Fix AI Errors Reliably

Here’s her process for doing discovery on AI quality:

Log traces: record inputs and outputs for requests you care about.

Human review & tagging: sample traces and have humans label errors and categorize them.

Identify common error classes: group similar failures together so you can target fixes.

Write evals: create automated checks that flag those error classes programmatically.

A/B test fixes: deploy changes and measure whether the evals (and business metrics) improve.
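The five-step loop can be sketched end to end in Python. Everything here is illustrative: the traces, human tags, error class names, and regex-based evals are made up, and step 5 is shown as an offline comparison of two variants on the same inputs rather than a live A/B test (which would also track business metrics).

```python
import re
from collections import Counter
from typing import Callable

# Step 1: logged traces (hypothetical inputs and outputs).
traces = [
    {"id": 1, "input": "Summarize ticket #12", "output": "Customer wants a refund."},
    {"id": 2, "input": "Summarize ticket #13", "output": "As an AI language model, I cannot..."},
    {"id": 3, "input": "Summarize ticket #14", "output": ""},
    {"id": 4, "input": "Summarize ticket #15", "output": "As an AI language model, I think..."},
]

# Step 2: a human reviewer tags each sampled trace with an error class (None = fine).
human_tags = {1: None, 2: "refusal_boilerplate", 3: "empty_output", 4: "refusal_boilerplate"}

# Step 3: group failures to find the most common error classes.
error_counts = Counter(tag for tag in human_tags.values() if tag)

# Step 4: code-based evals that flag each error class automatically (hypothetical rules).
EVALS: dict[str, Callable[[str], bool]] = {
    "empty_output": lambda out: not out.strip(),
    "refusal_boilerplate": lambda out: bool(re.search(r"as an ai language model", out, re.I)),
}


def eval_agrees_with_humans() -> bool:
    """Check each eval fires exactly on the traces humans tagged with its class."""
    for t in traces:
        for cls, check in EVALS.items():
            if check(t["output"]) != (human_tags[t["id"]] == cls):
                return False
    return True


def failure_rate(outputs: list[str]) -> float:
    """Share of outputs flagged by any eval."""
    flagged = sum(any(check(o) for check in EVALS.values()) for o in outputs)
    return flagged / len(outputs)


# Step 5: compare the current variant (A) with a candidate fix (B) on the same inputs.
variant_a = [t["output"] for t in traces]
variant_b = ["Customer wants a refund.", "Customer reports a login bug.",
             "Customer asks about pricing.", "Customer wants to cancel."]

print(error_counts.most_common())
print("evals match human tags:", eval_agrees_with_humans())
print(f"failure rate A={failure_rate(variant_a):.2f}, B={failure_rate(variant_b):.2f}")
```

Checking the evals against the human tags before trusting them matters: an eval that disagrees with your reviewers will send you chasing the wrong fixes.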

If you want to hear her take on what it takes to be a real PM, and how much she makes in her business, you’ll have to listen to the episode on Apple, Spotify and YouTube!



If you want to advertise, email productgrowthppp at gmail.


P.S. More than 85% of you aren't subscribed yet. If you subscribe on YouTube and follow on Apple and Spotify, my commitment to you is that we'll keep making this content better.