The AI PM's Playbook: How Top Product Managers Are 10x-ing Their Impact in 2025

We've all been sold a million ways to use AI as a PM. Let's cut through the fluff with the top use cases, tools, and rules to improve your productivity at work without killing your reputation.

It’s the thing we all keep hearing about AI…

“All PMs need to become AI PMs.”

For my money, it’s a true statement. But with some caveats.

Let me explain.

The 3 Types of AI PMs

What do we mean by ‘AI PM’?

There are actually three types of AI PMs:

1. AI-powered PM: This is every single PM. All of us will need to use AI to “work unfairly in this unfair job.”

2. AI PM: These are the specialists building core AI products at companies like OpenAI and Anthropic.

3. AI feature PM: These are PMs adding AI features to existing products, e.g., Notion’s AI writing assistant or Miro’s smart canvas.

Not every PM will become a type 2 or type 3 AI PM.

But every PM needs to become type 1: AI-powered. That’s the subject of today’s piece.

Today’s deep dive is completely free for all thanks to Userpilot - the all-in-one Product Growth platform, with analytics, session recording, in-app engagement & user feedback.

Watch this space for my upcoming interview with them on February 19th at 11 AM EST.

Today’s Deep Dive

I talked to 10+ PMs about how they use AI. Here’s a concise, to-the-point guide to take you from “Just ChatGPT” to “AI-first” as a Product Manager (PM):

The 3 Rules to Using AI Right

Top 5 AI PM Use Cases

Common Mistakes

1. The 3 Rules to Using AI Right

We’ve all been bombarded with information about how to use AI. So I wanted to distill it down to the core first principles.

And I’ve boiled them down to three key rules you must remember when using AI as a PM:

Rule 1 - Prompt skill is everything

Each tool has its own prompting nuances. But in general, prompting has a steep skill curve.

Just like everyone assumes they’re an above-average driver, everyone tends to assume they’re an above-average prompter.

In reality, if you haven’t been practicing, you probably have a long way to go as a prompter.

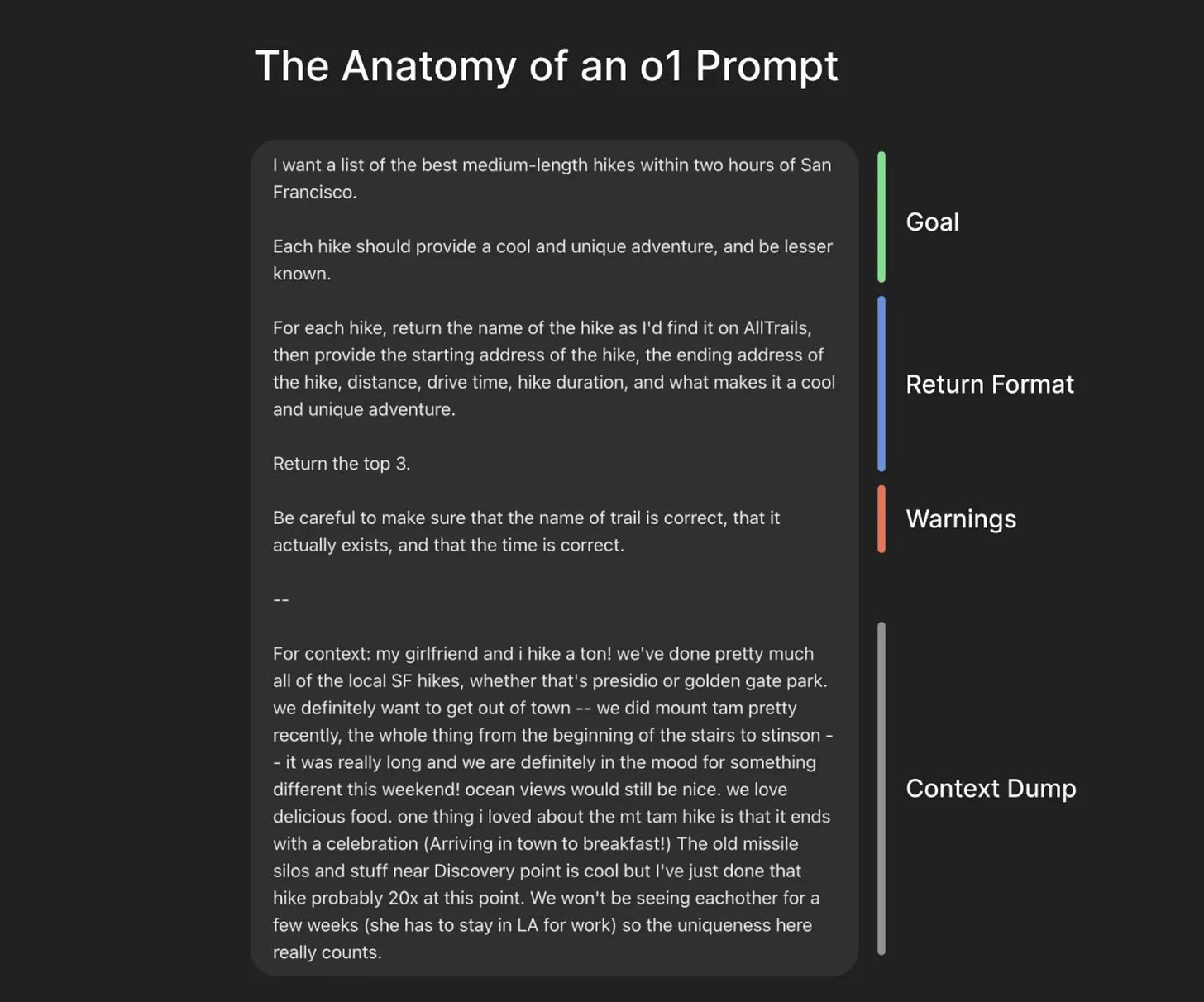

If you’re using ChatGPT o1 for instance, the optimal prompt structure looks like this from Dan Mac and retweeted by OpenAI’s President Greg Brockman:

Rule 2 - 20-60-20

You need to do the first 20% of the work on any PM document yourself, because the truth is: Claude doesn’t have access to your context as a PM. The AI handles the middle 60%.

So you need to brain-dump the relevant information into it, as the picture above alludes to.

You also need to do the last 20% of the work. That’s where you remove the traces of AI from the text and add your own unique human context.

That’s why the company hired you, after all, and didn’t just spin up an AI agent!

Rule 3 - Revise till you get what you want

It’s rarely the case that the first output you get from an AI is exactly what you want. You generally want to iterate with it.

Provide clear, specific feedback that it can easily incorporate. Written output usually becomes usable around draft 4 or 5.

The best PM I know treats AI like user research – multiple rounds of refinement. Her process:

Draft 1: Basic prompt

Draft 2: Add specific examples

Draft 3: Incorporate stakeholder constraints

Drafts 4-5: Fine-tune tone and details

Now let’s get into the specifics…

2. Top 5 AI PM use cases

We’re all being sold a million ways to use AI as an AI-powered PM.

For my money, these are the 5 that really matter:

Use Case 1 - PRDs

Remember the classic PM nightmare? It's 4 PM, your VP needs a comprehensive PRD by tomorrow, and you're staring at a blank document.

Last month, I shadowed a PM at a FAANG company working on a new feature spec. Their first AI prompt was beautiful – but completely wrong for their use case.

This is the mega-prompt framework they developed:

Context setting (20%): "You're helping scope a feature for a B2B SaaS product with enterprise security requirements..."

Constraints (30%): "Must comply with SOC2, handle offline scenarios..."

Examples (30%): "Here's our last successful spec..."

Output format (20%): "Structure this like our standard PRD template..."
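If you reuse this framework often, it can help to keep the four sections as a fill-in template so none gets skipped. Here’s a minimal Python sketch, assuming you paste the result into Claude or another chat tool by hand. The function name `build_prd_prompt` and the `##` section labels are my own hypothetical choices, not part of the PM’s original prompt, and the sketch doesn’t try to enforce the percentages, which are a rough guide rather than a hard rule.

```python
# Hypothetical helper that assembles the four-part PRD mega-prompt.
# Section order mirrors the framework above; the labels and separators are illustrative assumptions.


def build_prd_prompt(context: str, constraints: list[str], examples: list[str], output_format: str) -> str:
    """Join context, constraints, examples, and output format into one labeled prompt string."""
    sections = [
        ("Context", context),
        ("Constraints", "\n".join(f"- {c}" for c in constraints)),  # one bullet per constraint
        ("Examples", "\n\n".join(examples)),  # blank line between pasted examples
        ("Output format", output_format),
    ]
    # Label each section so the model can tell where one part ends and the next begins.
    return "\n\n".join(f"## {title}\n{body}" for title, body in sections)


if __name__ == "__main__":
    prompt = build_prd_prompt(
        context="You're helping scope a feature for a B2B SaaS product with enterprise security requirements.",
        constraints=["Must comply with SOC2", "Must handle offline scenarios"],
        examples=["Here's our last successful spec: [paste spec]"],
        output_format="Structure this like our standard PRD template: [paste template]",
    )
    print(prompt)
```

The point isn’t the code itself; it’s that a fixed template forces you to do the context work every time instead of firing off a one-line prompt.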

So they spent 20% of the time developing that context, then took the 80%-ready document and spent 30 minutes upgrading it.

I’ve tried all the major AI tools, and right now, the top three are:

Claude

ChatPRD

ChatGPT

And it’s in that order. Claude is just insanely good right now. (But that could change tomorrow.)

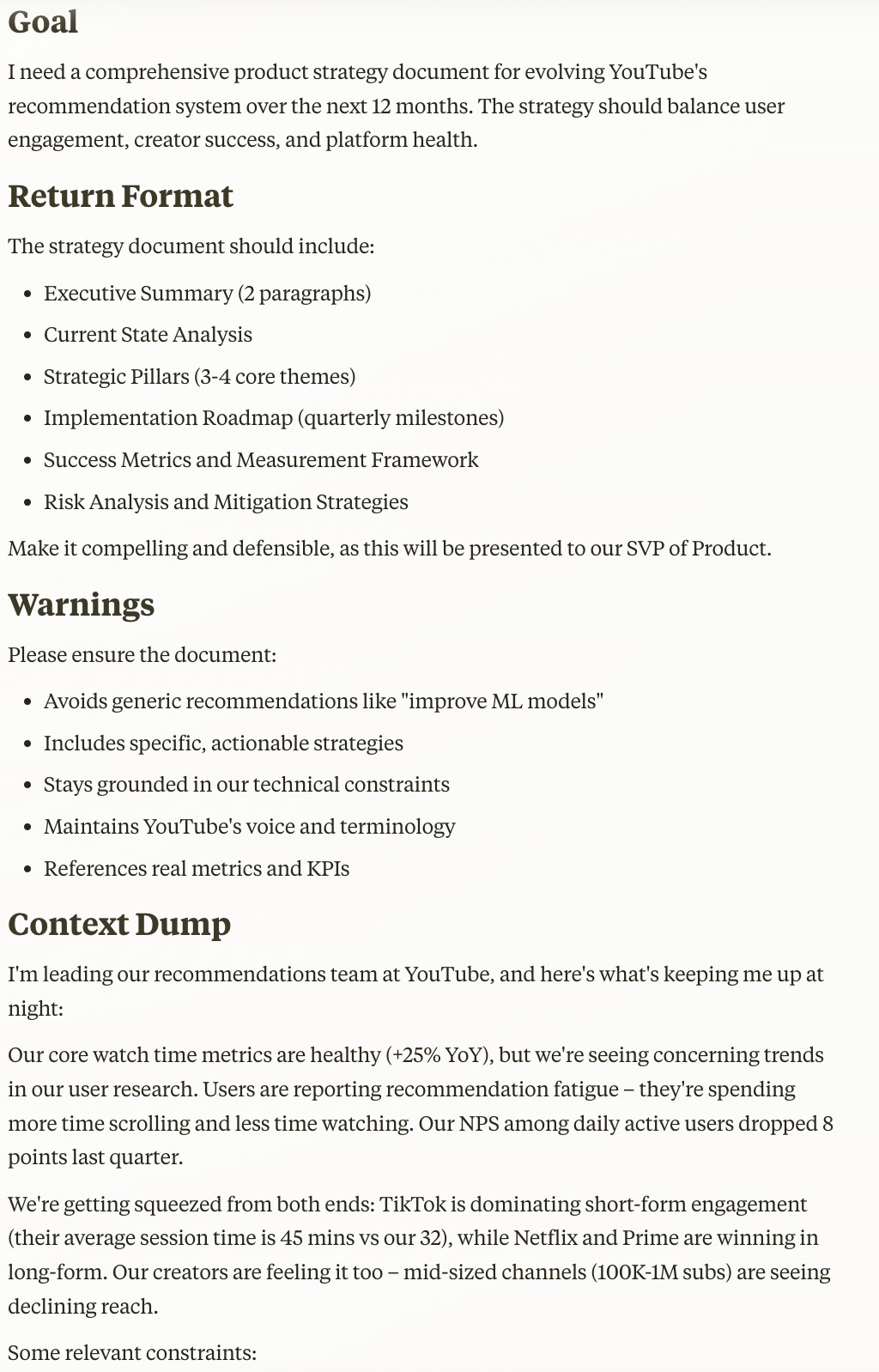

Use Case 2 - Strategy docs

Product strategy is one of the most important jobs for PMs.

But a lot of the time, the value of your strategy document lies in the research and work you do before writing it.

When it comes time to actually write a hard-hitting doc, the highest returns aren’t always in writing it beautifully.

That’s where a tool like Claude comes in. If you give it all the right thinking and core principles, it can come back with a well-defended articulation of them.

The prompt structure that works best here is to overwhelm Claude with context: give it 5-6 key pieces of context so it doesn’t misunderstand your situation.

Some of the key inputs to consider adding to the context dump include:

- Last quarter's experiment results: [data]

- Customer interview insights: [findings]

- Technical architecture limitations: [specifics]

- Competitor moves: [analysis]

- Team capacity: [details]

- Well-received strategy doc: [whole doc]. There aren’t many software product strategies in its training data, so feeding it a great one from your company helps.
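One way to make sure you actually hit all of those inputs before hitting send is a simple checklist that refuses to build the prompt while anything is blank. Here’s a minimal Python sketch; the input names come from the list above, but the function `build_context_dump` and its check are my own hypothetical illustration, not a feature of Claude or any specific tool.

```python
# Hypothetical checklist for the strategy context dump.
# Input names mirror the list above; the validation logic is an illustrative assumption.

REQUIRED_INPUTS = [
    "Last quarter's experiment results",
    "Customer interview insights",
    "Technical architecture limitations",
    "Competitor moves",
    "Team capacity",
    "Well-received strategy doc",
]


def build_context_dump(inputs: dict[str, str]) -> str:
    """Return the labeled context block, or raise ValueError if any required input is missing or blank."""
    missing = [name for name in REQUIRED_INPUTS if not inputs.get(name, "").strip()]
    if missing:
        # Every gap here is a spot where the model will fill in generic assumptions instead of your reality.
        raise ValueError(f"Missing context: {', '.join(missing)}")
    return "\n\n".join(f"{name}:\n{inputs[name].strip()}" for name in REQUIRED_INPUTS)
```

You’d paste the returned block at the top of your strategy prompt. Raising an error rather than silently skipping blanks is deliberate: the failure mode this guards against is a confident-sounding doc built on half the context.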

Use Case 3 - Competitive research

We’ve all been there: a new competitor comes out of nowhere and shakes up the market. You know your bosses are going to be looking for your take on it.

Perplexity can vastly speed up your time to insight. It’s like an enhanced Google search.

Here’s how you can do competitive research with it:

Feed it the new competitor.

Ask for feature comparisons, pricing strategies, and user sentiment.

Let it dig through recent updates you might have missed.

Here’s an example of a report it created for a hypothetical PM at Lemlist about Instantly.

AI might not be able to cover everything you need to as a PM, but it turns a 3-hour exercise (writing up some thoughts for your boss about a new competitor) into more like 30 minutes.

AI can do most of the fact finding and point you to links with more info. You can add the thinking on top.

Use Case 4 - Meeting updates

There are so many meetings where AI can transcribe the discussion and draft the next steps for you.

Normally, after product reviews, every PM dutifully spends 30 minutes doing this.

An AI note-taking tool can do 90% of it for you.

You make a few edits, and send it out.

After testing every tool out there, I believe Otter.ai is currently the best at generating the right notes and next steps.

But you can use the one packaged with your meeting tool (e.g., Zoom AI) if your company pays for it.

Just remember to actually turn it on! You’d be surprised how many people I talk to don’t.

It’s also an option to paste your transcript into ChatGPT, Gemini, or Claude and ask them to write an update from your perspective to the team with next steps and key takeaways.

Use Case 5 - Scaling prototyping and design iteration

Last week, I saw a PM go from an idea to a clickable prototype in under an hour. Their secret? Claude Artifacts for wireframes.

You can also use tools like Bolt, Cursor, Lovable, and Replit.

The game-changer isn't just speed - it's iteration. When stakeholders want changes, you're not redoing hours of work. You're tweaking a prompt and generating new versions in minutes.

Real talk: The first versions won't win design awards. But for early feedback and concept validation? Pure gold.

Summary of Tools Recommended

Long Tail of Use Cases

I’ve talked a lot in the past about other use cases. These are also good ways to use AI, but I find the top 5 to be the ones that:

Best build your prompting skill

Build the mental habit of bringing AI into your process

Anyway, here is a long tail of the next 10 best use cases to consider. Where I’ve written about one, I’ve linked to it:

3. Most Common Mistakes Using AI as a PM

I've made them all, so you don't have to.

Here are the face-palm moments every AI PM needs to avoid:

Mistake 1 - Letting AI think instead of write

Last month, a startup Head of Product shared their AI-generated strategy doc with me.

Clean structure.

Perfect frameworks.

Missing literally everything that mattered.

The strategy read like a McKinsey deck from 2019. Zero mention of their recent enterprise pivot. Complete silence on their failed PLG motion.

And, most painfully, it recommended features their top customers had explicitly rejected last quarter.

Here's the truth: AI can structure thoughts beautifully. But it can't understand why your last three experiments failed.

Or why your CEO gets nervous every time someone mentions consumption pricing.

Or which competitor moves actually matter to your specific market position.

Your job is to feed it context, guide its output, and enhance it with the insights only you can provide.

AI should amplify your thinking, not replace it.

Mistake 2 - Forgetting to remove the AI

Two weeks ago, a PM friend sent me their board deck for feedback.

Five minutes in, I spotted the telltale signs of unedited AI work.

Market sizing from 2021.

Competitor analysis that missed three major acquisitions.

Feature recommendations that ignored their core technical constraints.

This wasn't a failure of AI - it was a failure to treat AI output as a first draft.

When using AI for analysis, you need to shape that output with current market knowledge and hard-earned insights.

I've learned to give every AI output a rapid reality check: Are these numbers current? Has the competitive landscape shifted? Does this align with what we know about our users?

Mistake 3 - Outdated Tools

At a product offsite recently, I watched five PMs debate their AI stack for 45 minutes.

Each swore by a different tool. None had actually tested alternatives in the last three months.

The AI landscape evolves weekly. That perfect workflow you built around GPT-3.5? There might be three better options now.

But here's the key: You don't need to chase every shiny new AI tool.

Build a quick testing framework. Spend one hour monthly trying new tools. Ask other PMs what's actually working. Focus on solving real problems, not collecting AI badges.

After a year of watching PMs navigate these waters, I've learned that becoming an AI-powered PM isn't about mastering tools.

It's about knowing when to lean on AI and when to trust your hard-earned product instincts.

Final Words

Every PM I know who's crushing it with AI started by failing fast and learning faster.

Develop your own style. But don’t stop trying the latest tools.

Measure your skill level across all 5 use cases, and keep improving. You got this!

Additional Resources

I’ve written much more about this topic at varying levels of depth. Here are some pieces you may like:

What else do you want to know about using AI as a techie? Reply to this e-mail.

Up Next

I hope you enjoyed the latest podcasts with Chloe Shih and Bilawal Sidhu. Up next, we have Adam Robinson, Sergio Pereira, and Pierre Herubel. We also have Marily Nika on AI PMing at the beginning of February.

And in the newsletter, look forward to:

European Market Deep Dive

How Jira Product Discovery Grows

Rock the Product Design Interview

Talk soon,

Aakash