Gemini 3 isn't just the top model, it's rewriting AI infrastructure: AI Update #4

I had early access to Gemini and here's what I learned: the model isn't just the best, it's rewriting the economics of AI

Welcome back to the AI Update.

Google released Gemini 3 Tuesday, and the new Nano Banana Pro this morning. I got early access to both, and have been thinking deeply about the implications.

Here’s where I land: This week is one of those moments that changes everything. And most of the commentary is focused on the wrong thing:

Gemini 3 is shocking because of the benchmarks. Google now has the top model (something Polymarket had been predicting all year, but which has only just happened).

But it’s even more shocking because Google trained it entirely on custom TPUs and proved you don’t need Nvidia’s supply chain to reach the frontier.

That’s today’s deep dive:

For years, the narrative has been simple: bigger model = better performance. Throw more compute at the problem. But Gemini 3 proves something different: better architecture, better training methodology, and tighter control over infrastructure can change everything.

Google trained this entirely on their own TPUs. They can serve it at scale without touching Nvidia’s supply chain. And the performance is the kind of leap that makes everything before it feel outdated.

Three things that actually changed

1/ Multimodal from the ground up

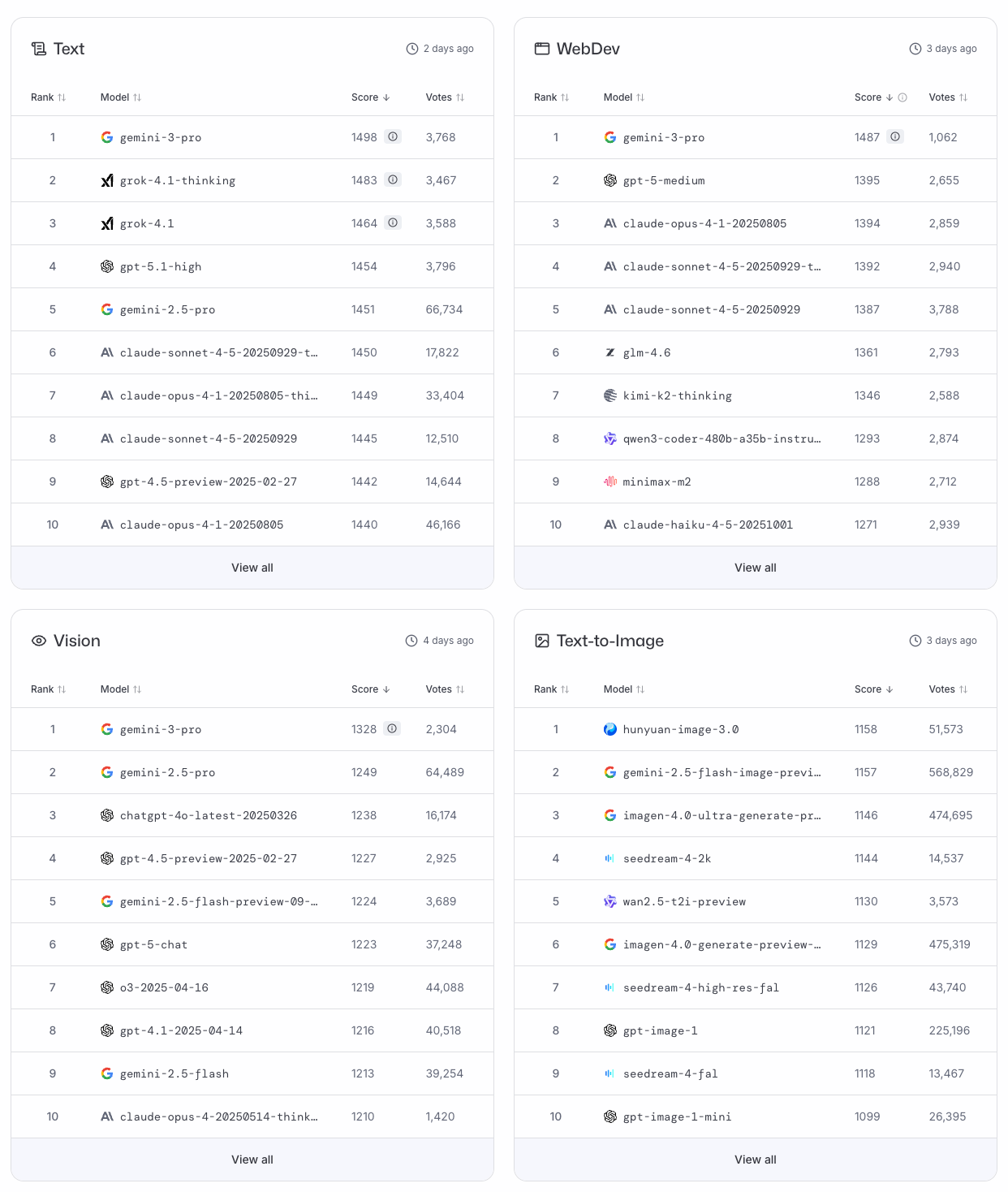

Across text, images, video, and code, Gemini shot to the top of the leaderboards, immediately overshadowing the news from earlier this week that Grok-4.1 had beaten GPT-5.1.

2/ State-of-the-art reasoning

Gemini 3 excels at reasoning. It scored 91.9% on GPQA Diamond, a PhD-level science benchmark, and 23.4% on MathArena Apex.

This matters when you’re working through multi-step problems, analyzing data, or trying to understand something technical.

3/ Solved text in images

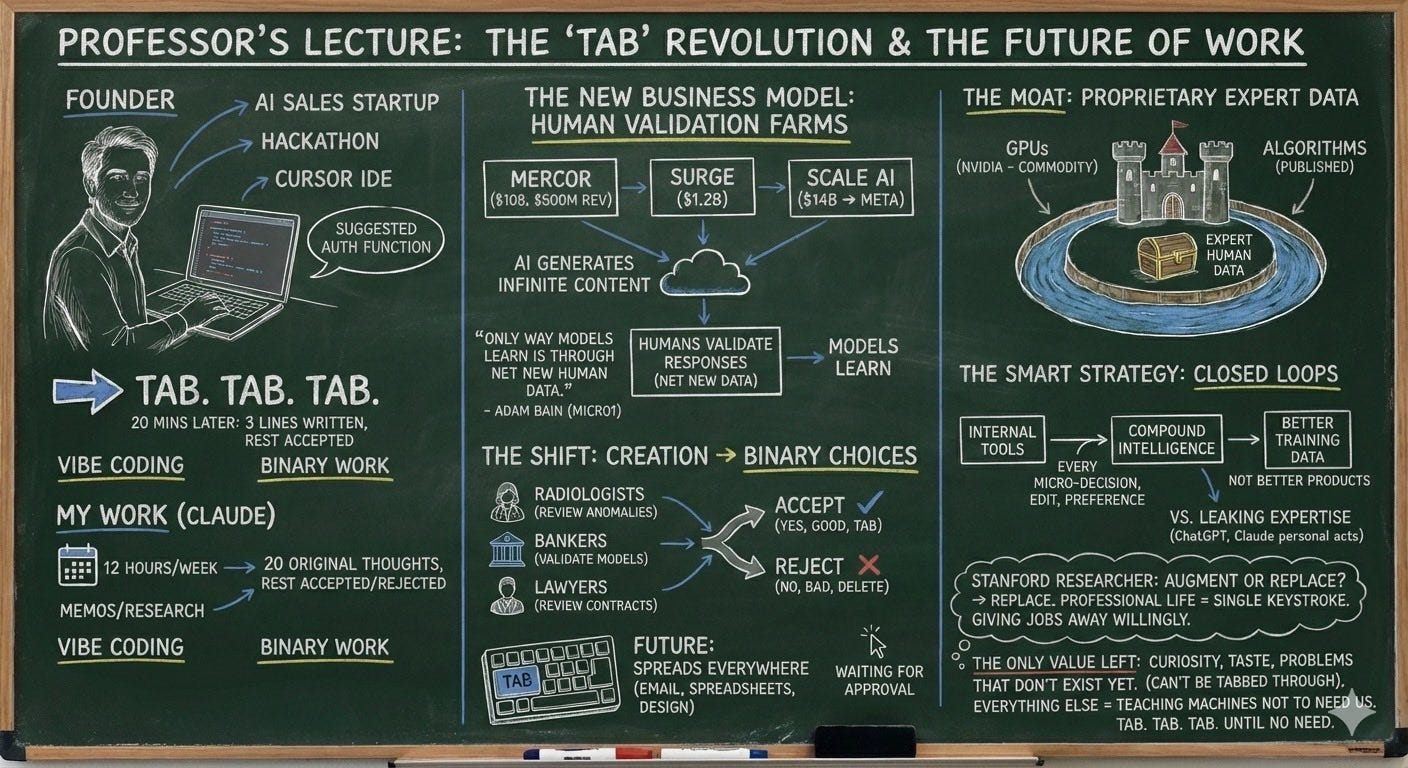

The Nano Banana Pro model that dropped this morning is one-shotting amazing text in images in a way that no model before it has. For instance, here’s a whole chalkboard from a blog post:

The infrastructure moat was just redrawn

The craziest part of it all is that Gemini 3 was trained entirely on Google’s TPUs. Not Nvidia chips. Not rented infrastructure. Google’s own silicon.

Now they’re lending those TPUs to Anthropic and Midjourney, which means the most capable AI companies in the world are now training on Google’s custom hardware.

This changes everything. For years, the narrative was simple: whoever has the most GPUs wins. Nvidia controls the supply chain. Nvidia’s moat wins.

But Gemini 3 proves something else. If you can build state-of-the-art models on your own chips, and you have the capital to manufacture them at scale, the game changes.

Google has capital. Google has training data. Google has proprietary TPUs. And now Google has proven these TPUs can reach the frontier.

Nvidia sells shovels. Google is building the mine.

If the AI race is a marathon, Google has the infrastructure advantage. That’s probably why Berkshire Hathaway keeps buying Google while sitting on massive cash. They’re seeing what most aren’t: the infrastructure play is the long-term play.

That’s it for today’s deep dive. Here’s everything else that mattered in AI this week:

Google also launched Antigravity: The agentic IDE

While everyone is talking about Gemini 3, I saw very few people talking about another piece of news that matters almost as much.

Cursor has hit a $29B valuation and shown that IDEs are a key AI market. Now, Google is playing there as well with Antigravity. It’s the result of Google’s $2.4B deal to hire Windsurf CEO Varun Mohan and his team four months ago.

Google has notoriously launched lots of IDEs only to drop support for them months later, but this one appears different: even Sergey Brin appeared in the launch video.

I’m skeptical it will win (Google doesn’t use it internally), but it’s worth monitoring.

News

Jeff Bezos is back as CEO at a new AI startup, Project Prometheus, with $6.2B in funding

Replit added a visual design system to build sites without coding

Nvidia beat its guidance at earnings again

OpenAI launched GPT-5.1-Codex-Max

xAI launched Grok-4.1

Resources

20 mins to AI Agents

AI Prototyping tool comparison

Templates for your AI automations

New Tools

Manus Browser Operator: Turn any browser into an AI browser

Parallel: get high-quality datasets back from the web with 1 query

Ramp Sheets: AI spreadsheet editor built for finance teams & founders

Fundraising

Ramp raised $300M to build a full-scale finance-operations platform

Suno, the AI music maker, raised $250M

That’s all for today. See you next week,

Aakash