Nvidia’s Moat

Breaking down what is really behind Nvidia's "moat," and why the company has suddenly become so valuable

Nvidia’s $2.70T market cap has come stunningly fast for a company that was founded in 1993.

The story has been: Nvidia’s data center business is blowing up. All the hyperscalers, from AWS to Azure and Google Cloud, can’t buy enough of Nvidia’s AI chips.

Year over year, in the latest quarter, Nvidia’s revenues have ballooned 101%, while its operating expenses are only up 10%. But market cap is the market’s prediction of sustainable future profits.

So what are Nvidia’s sustainable future profits built on? Any discussion of this quickly uses the word “moat” and throws in some tech like CUDA and H100s. I’ve found this conversation tends to stay at the surface level.

We’ll take a look at the actual software and hardware behind Nvidia’s “moat,” and at how it stacks up against the competition.

Today’s Post

Words: 4,030 | Est. Reading Time: 19 mins

The world’s first AI supercomputer

Blackwell & the race for flops

The CUDA story

Where AMD stands

The Big Tech threat

The startups like Groq

The future of AI compute

Beyond AI: Nvidia’s future

Lessons for PMs and product leaders

1. The World’s First AI Supercomputer

People think that we build GPUs. But this GPU is 70 pounds, 35,000 parts. Out of those 35,000, 8 of them come from TSMC. It is so heavy you need robots to build it. It’s like an electric car. It consumes 10,000 amps. We sell it for $250,000. It’s a supercomputer.

—Jensen Huang, CEO of Nvidia

In 2016, Jensen Huang gave a talk at Nvidia’s GTC conference about the DGX-1, an AI supercomputer his team had been building. Elon Musk got wind of it and said, “I want one of those.”

When the first unit was ready, Jensen delivered it to OpenAI himself and took a photo op. It was the world’s first AI supercomputer, and, unlike the rest of the world, Jensen knew how big a deal that was.

In many ways, Jensen was the first semiconductor CEO to bet his company on the AI revolution.

What drove him and his team to that conviction level?

They were inspired by AlexNet and by the broader work on neural networks by Ilya Sutskever (of OpenAI fame) and Geoffrey Hinton (the godfather of neural networks).

Neural networks are programs written backward. Most software is written first, then tested against the results you want. A neural network starts from the results you want (examples of inputs and their desired outputs), and training derives the program from them.
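To make the contrast concrete, here is a toy Python sketch (not from the original post): a hand-written rule next to a single-neuron linear model, with one weight and one bias, that recovers the same rule purely from input/output examples via gradient descent. All names are hypothetical, and real neural networks have billions of parameters and nonlinear layers, but the principle of fitting parameters to the results you want is the same.

```python
# Toy contrast between "forward" and "backward" programming (illustrative only).

# Traditional software: a human writes the rule, then tests it against the results wanted.
def handwritten_rule(x: float) -> float:
    return 2.0 * x + 1.0

# "Backward" programming: start from examples of the results wanted
# and let training find the parameters (one weight w and one bias b).
examples = [(float(x), 2.0 * x + 1.0) for x in range(-5, 6)]

w, b = 0.0, 0.0        # the model starts out knowing nothing
learning_rate = 0.01

for _ in range(2000):
    # Gradients of the mean squared error with respect to w and b.
    grad_w = grad_b = 0.0
    for x, y in examples:
        error = (w * x + b) - y
        grad_w += 2 * error * x / len(examples)
        grad_b += 2 * error / len(examples)
    # Step downhill on the error surface.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(f"learned w={w:.3f}, b={b:.3f}")
matches = all(abs((w * x + b) - handwritten_rule(x)) < 1e-3 for x, _ in examples)
print("matches hand-written rule:", matches)
```

Nobody typed "2x + 1" into the trained model; it found those values from the examples alone.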

This inspiration prompted Jensen and the Nvidia team to bet on where the world was heading: AI computing. ChatGPT’s release in November 2022 proved them right.

So that’s really what Nvidia’s moat is: a moat around the hardware and software that go into its AI supercomputers.

Let’s tackle the hardware (section 2) and software (section 3) separately.

2. Blackwell and the Race for Flops

These AI supercomputers are used for both training and inference, but training tends to be the most limiting factor right now.

Training compute is typically measured in floating-point operations (flops). And humans are using up all the flops that Nvidia makes available.

Organizations like Meta, Google, and OpenAI run farms of Nvidia GPUs for weeks or months at a time to train a single model.

Here’s how the math works out:

The latest OpenAI model reportedly has about 1.8 trillion parameters. Think of these as the values (weights) on the connections between nodes in the neural network.

To train those parameters, OpenAI used roughly 10 trillion tokens.

Training on that many tokens takes approximately 30 billion quadrillion (3 × 10^25) floating-point operations.

Nvidia’s current Hopper H100 GPU can do about 1 petaflop per second (989 teraflops) of dense BFloat16 operations; the often-quoted 4 petaflops is its FP8 figure with sparsity. At 40% utilization (typical once parallelization and other real-world inefficiencies are accounted for), this would take about 21 million Hopper hours (at ~$3/hour). So if you used 16,000 H100s, it would take 54 days.

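The latest Blackwell architecture is rated at 5 petaflops per second of BFloat16 operations per GPU. At 40% utilization, this would take only about 4 million Blackwell hours. So, if you used 16,000 Blackwell GPUs, it would take only about 11 days. One caveat: Nvidia’s 5-petaflop figure assumes sparsity, so the like-for-like dense number is closer to 2.5 petaflops, which works out to roughly 8 million hours, or about 22 days. That is still more than twice as fast as Hopper.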
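The arithmetic above can be reproduced with a short Python sketch. It takes the post’s numbers as inputs (3 × 10^25 FLOPs, 40% MFU, 16,000 GPUs, ~$3 per Hopper hour); the per-GPU peak figures are labeled in the code, and the dense Blackwell value is an assumption (roughly half the headline number), not an official spec.

```python
# Back-of-the-envelope training time, using the figures from the text.
# Assumptions: 3e25 total FLOPs, 40% MFU, 16,000 GPUs, and the per-GPU
# BF16 peaks below (the dense Blackwell figure is assumed, ~half of 5 PF).

TOTAL_FLOPS = 30e9 * 1e15   # "30 billion quadrillion" = 3e25 FLOPs
MFU = 0.40                  # model FLOPs utilization
NUM_GPUS = 16_000
SECONDS_PER_HOUR = 3_600

def gpu_hours(peak_flops_per_s: float, total_flops: float = TOTAL_FLOPS, mfu: float = MFU) -> float:
    """GPU-hours needed if every GPU sustains peak * mfu FLOP/s."""
    return total_flops / (peak_flops_per_s * mfu) / SECONDS_PER_HOUR

def wall_clock_days(hours: float, num_gpus: int = NUM_GPUS) -> float:
    """Calendar days if the GPU-hours are spread evenly across num_gpus."""
    return hours / num_gpus / 24

PEAKS = {
    "Hopper H100, ~1 PF dense BF16": 1e15,
    "Blackwell, 5 PF headline BF16": 5e15,
    "Blackwell, ~2.5 PF dense BF16 (assumed)": 2.5e15,
}

for name, peak in PEAKS.items():
    hours = gpu_hours(peak)
    print(f"{name}: {hours / 1e6:.1f}M GPU-hours, "
          f"{wall_clock_days(hours):.0f} days on {NUM_GPUS:,} GPUs")

print(f"Hopper run at ~$3/GPU-hour: ${gpu_hours(1e15) * 3 / 1e6:.1f}M")
```

Because utilization is a single constant here, anything that improves real-world efficiency (faster interconnect, better software) shows up only if you raise `MFU`.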

Beyond teraflops

Beyond raw flops, Nvidia’s Blackwell platform leads across the other areas of parallel AI compute. One of the key factors that can significantly affect the training time of large AI models, such as GPT-4, is the communication bandwidth between GPUs.

Blackwell’s fifth-generation NVLink doubles Hopper’s GPU-to-GPU bandwidth, to 1.8 TB/s of bidirectional bandwidth per GPU. That can also significantly speed up training, a gain that is masked by the constant 40% MFU (model FLOPs utilization) assumed above.
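Why does interconnect bandwidth matter so much? In data-parallel training, GPUs must average their gradients after every step, commonly with an all-reduce. This sketch uses the textbook bandwidth-only cost model for a ring all-reduce, in which each of N GPUs moves 2(N − 1)/N times the buffer size. The workload (10 billion BF16 gradients on 8 GPUs) is hypothetical, and splitting the bidirectional NVLink figures evenly per direction (900 GB/s for Hopper, 1.8 TB/s for Blackwell) is a simplifying assumption.

```python
# Idealized ring all-reduce cost model (bandwidth term only; ignores latency,
# overlap with compute, and topology). Each of N GPUs sends and receives
# 2 * (N - 1) / N * S bytes to all-reduce a buffer of S bytes.

def ring_allreduce_seconds(buffer_bytes: float, num_gpus: int, link_bytes_per_s: float) -> float:
    bytes_on_wire = 2 * (num_gpus - 1) / num_gpus * buffer_bytes
    return bytes_on_wire / link_bytes_per_s

# Hypothetical workload: 10 billion BF16 gradients (2 bytes each) across 8 GPUs.
GRAD_BYTES = 10e9 * 2
NUM_GPUS = 8

# NVLink figures are bidirectional; assume half is available in each direction.
hopper = ring_allreduce_seconds(GRAD_BYTES, NUM_GPUS, 900e9 / 2)
blackwell = ring_allreduce_seconds(GRAD_BYTES, NUM_GPUS, 1.8e12 / 2)

print(f"Hopper:    {hopper * 1e3:.1f} ms per all-reduce")
print(f"Blackwell: {blackwell * 1e3:.1f} ms per all-reduce")
print(f"Speedup:   {hopper / blackwell:.1f}x")
```

In this model, communication time scales inversely with link bandwidth, so doubling NVLink bandwidth halves the time GPUs spend exchanging gradients.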

Blackwell continues to optimize all the little things to enable efficient scaling of AI workloads across large clusters of GPUs.

Reducing inefficiencies results in exaflops

That’s why some of the bigger releases out of Nvidia’s event this week were actually adjacent to GPUs.

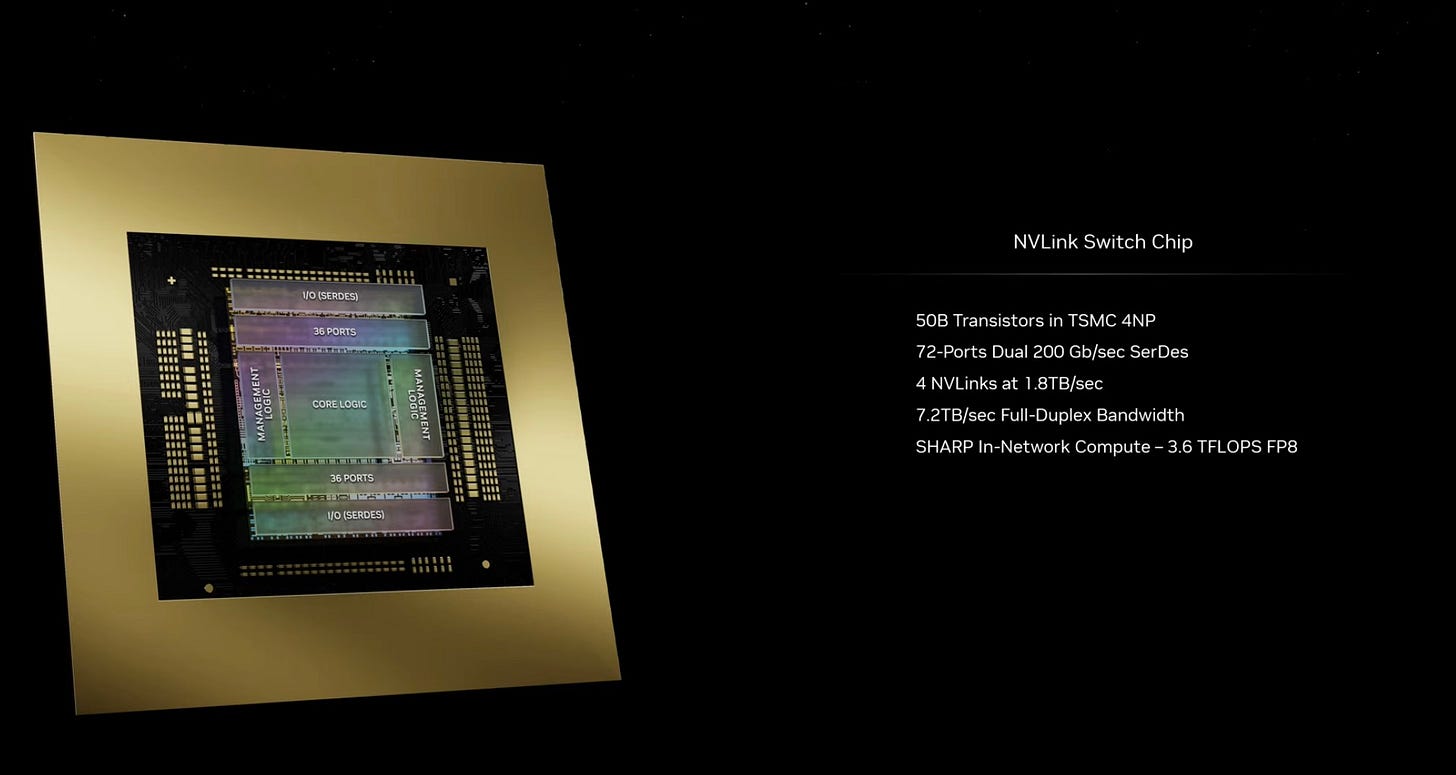

Take the NVLink Switch chip, which Jensen calls a DPU (data processing unit). On its own it packs 50 billion transistors on TSMC’s 4-nanometer process, with four 1.8 TB/s NVLinks. It ties all your GPUs together.

Combined with Nvidia’s latest InfiniBand and Ethernet switches, the latest Blackwell supercomputer, the GB200 NVL72 rack, delivers 720 petaflops of FP8 training performance.

For inference, it can do 1.44 exaflops (1,440 petaflops) of FP4. Today, there are only a few exaflop machines in the entire world. These are exaflop machines in a single rack.
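Dividing the rack-level numbers back out is a useful sanity check. This sketch assumes the GB200 NVL72 configuration of 72 Blackwell GPUs per rack and uses the headline figures quoted above; the constant names are illustrative.

```python
# Sanity-check the rack-level headline numbers quoted above.
PFLOPS_PER_EXAFLOP = 1_000

GPUS_PER_RACK = 72        # assumed GB200 NVL72 configuration: 72 Blackwell GPUs
RACK_FP8_PFLOPS = 720     # training figure quoted above
RACK_FP4_PFLOPS = 1_440   # inference figure quoted above

def per_gpu(rack_pflops: float, gpus: int = GPUS_PER_RACK) -> float:
    """Split a rack-level throughput figure evenly across its GPUs."""
    return rack_pflops / gpus

print(f"FP8 per GPU: {per_gpu(RACK_FP8_PFLOPS):.0f} PFLOPS")
print(f"FP4 per GPU: {per_gpu(RACK_FP4_PFLOPS):.0f} PFLOPS")
print(f"FP4 per rack: {RACK_FP4_PFLOPS / PFLOPS_PER_EXAFLOP:.2f} exaflops")
# Halving the number format's width (8 bits to 4 bits) doubles the quoted throughput.
print(f"FP4 vs FP8: {RACK_FP4_PFLOPS / RACK_FP8_PFLOPS:.0f}x")
```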

The implication of more exaflops

So you know what that means: OpenAI is going to increase the size of its models and the inputs they take. Soon, Sora will have sound. And something like GPT-6 will have Sora. And GPT-10 will understand physics.

And that’s how it will continue with these large neural networks: OpenAI will increase the parameters, the training passes, and so on as fast as the compute allows.

That’s how we will eventually reach AGI, ASI, and beyond, and why Nvidia has such a long stream of future profits ahead.

Nvidia’s AI platform is hardly just hardware, though.

3. CUDA: An Essential for Most AI Software Stacks

Nvidia’s future stream of profits is further secured by CUDA, the software that sits upon its GPUs.

In the mid-2000s, Jensen and the Nvidia team found researchers (professors, like my dad) stringing together multiple Nvidia GPUs and hacking graphics packages to run parallelized high-performance computing on them.

So when they met Ian Buck, who had the vision of running general purpose programming languages on GPUs, they funded his Ph.D. After graduation, Ian came to Nvidia to commercialize the tech.

Two years later, in 2006, Nvidia released CUDA, which stands for Compute Unified Device Architecture. CUDA made the kind of parallel computation those researchers had been hacking together available to everyone.

Using familiar languages like C and C++, programmers can write CUDA kernels: functions that run in parallel across thousands of GPU threads and control how the GPU does its work. This gives them a degree of low-level control over the hardware that is unusual for a general-purpose programming model.
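Running real CUDA requires an Nvidia GPU and the nvcc compiler, so as a stand-in here is a pure-Python emulation of the CUDA execution model (hypothetical helper names; the loops run sequentially rather than in parallel). The comment at the top shows roughly what the equivalent CUDA C++ kernel and launch look like: each thread computes its global index from `blockIdx`, `blockDim`, and `threadIdx` and handles one array element.

```python
# Pure-Python emulation of how a CUDA kernel is launched over a grid of thread blocks.
# In real CUDA C++ this would look roughly like:
#   __global__ void vec_add(const float* a, const float* b, float* out, int n) {
#       int i = blockIdx.x * blockDim.x + threadIdx.x;
#       if (i < n) out[i] = a[i] + b[i];
#   }
#   vec_add<<<num_blocks, threads_per_block>>>(a, b, out, n);

def vec_add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    i = block_idx * block_dim + thread_idx  # global thread index
    if i < len(out):                        # guard: the last block may have spare threads
        out[i] = a[i] + b[i]

def launch(kernel, num_blocks, threads_per_block, *args):
    # On a GPU every (block, thread) pair runs concurrently; here we loop sequentially.
    for block_idx in range(num_blocks):
        for thread_idx in range(threads_per_block):
            kernel(block_idx, threads_per_block, thread_idx, *args)

n = 1000
a = [float(x) for x in range(n)]
b = [2.0 * x for x in range(n)]
out = [0.0] * n

threads_per_block = 256
num_blocks = (n + threads_per_block - 1) // threads_per_block  # ceiling division
launch(vec_add_kernel, num_blocks, threads_per_block, a, b, out)
print(num_blocks, out[:3], out[-1])
```

The key design idea is that the programmer writes the work for one thread, and the launch configuration decides how many copies run; that per-thread control is what CUDA exposes to developers.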

This has allowed researchers in a variety of disciplines to build on the CUDA architecture. Today, it’s an industry standard: all three of the top models today, Llama 2, Claude Opus, and GPT-4, were trained with it.

What CUDA allows is accessible customization of the low-level hardware, and developers love it. Nvidia’s market cap reflects the broad, large-scale adoption of its hardware-plus-software AI platform.

Beyond CUDA itself, Nvidia has a vast library of software under the CUDA-X umbrella that far outpaces the competition. Tools like cuDNN, RAPIDS, and TensorRT mean Nvidia has the most complete set of software for training large AI models and running inference on them.

These days, startups like MosaicML evaluate the available technology and invariably choose the Nvidia ecosystem over the rest.

Now let’s dig into exactly how these products stack up against the competition.


Subscribe to Product Growth to keep reading this post and get 7 days of free access to the full post archives.