DeepSeek is Back with Gemini-3 Performance, But Cheaper: AI Update #6

Plus: 4 Nano Banana Pro prompts you can ship as features, and how to price AI without killing your margins.

Welcome back to the AI Update.

The reasoning gap closed faster than expected this week.

DeepSeek released V3.2, matching Gemini-3.0-Pro’s capabilities while cutting costs by 70%.

What does this mean for the AI race? I’ll break it down in today’s weekly update.

Plus, I’ve been testing more Nano Banana Pro prompts. Last issue I shared 5; in today’s deep dive I’ll share 4 more that you can ship as features.

Finally, I’ll summarize my recent sit-down with Kyle Poyar about AI pricing. The short version: most AI features fail because they don’t move retention, not because of pricing.

Dazl: The AI Prototyping Tool Built for PMs

Are you hitting a wall at 80% when vibe coding? That’s what Dazl solves. Unlike other tools, they allow you to edit everything with full control & precision. Check them out!

Get 1 month free with code: DazlXPG

The reasoning gap wasn’t supposed to close this fast

While OpenAI, Google, Anthropic, and xAI trade the #1 spot weekly, something different happened on Sunday. DeepSeek dropped two models that match frontier performance and made them completely free.

DeepSeek-V3.2 is the daily driver. It performs at GPT-5.1-High level across reasoning benchmarks while supporting tool-use in both thinking and non-thinking modes.

DeepSeek-V3.2-Speciale is the reasoning specialist. It earned gold medals in the 2025 International Mathematical Olympiad, International Olympiad in Informatics, and ICPC World Finals.

What actually changed:

1/ Frontier reasoning at 70% lower cost: A new attention mechanism slashes inference costs dramatically. At a 128,000-token context (roughly a 300-page book), processing now costs about $0.70 per million tokens, down from $2.40 on their previous model.
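As a quick sanity check on that claim, here is a minimal sketch of the arithmetic. The two prices are the figures quoted above; the function name and the assumption that cost scales linearly with token count are mine, for illustration only.

```python
# Prices quoted above, in USD per million tokens at a 128K-token context.
OLD_PRICE_PER_M = 2.40
NEW_PRICE_PER_M = 0.70

def cost_usd(tokens: int, price_per_million: float) -> float:
    """Cost of processing `tokens` tokens, assuming simple linear pricing."""
    return tokens / 1_000_000 * price_per_million

# Relative saving of the new price against the old one.
reduction = 1 - NEW_PRICE_PER_M / OLD_PRICE_PER_M

# What a single 128K-token pass would cost at each price.
old_cost = cost_usd(128_000, OLD_PRICE_PER_M)
new_cost = cost_usd(128_000, NEW_PRICE_PER_M)

print(f"Reduction: {reduction:.1%}")
print(f"128K tokens: ${old_cost:.4f} -> ${new_cost:.4f}")
```

The saving works out to roughly 71%, which is where the "70% lower cost" headline comes from.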

2/ Thinking while using tools: Previous AI models lost their train of thought every time they called an external tool. V3.2 preserves reasoning across multiple tool calls - essential for complex agent workflows.

3/ Benchmarks that matter for builders:

On AIME 2025 (math): 93.1% for V3.2 and 96.0% for Speciale vs. GPT-5-High’s 94.6%

On Terminal Bench 2.0 (coding agents): 46.4% vs. GPT-5-High’s 35.2%

The catch: Token efficiency still lags Gemini-3.0-Pro, and DeepSeek acknowledges their world knowledge remains behind frontier proprietary models.

Why this matters: The 4-horse race (OpenAI, Google, Anthropic, xAI) is about who has the best model. DeepSeek is running a different race: making frontier-capable AI free under MIT license. For teams building agent-heavy applications where cost scales with usage, this changes the math entirely.

There are a billion AI news articles, resources, tools, and fundraises every week. Here’s what mattered:

News

Anthropic targeting $350B IPO at zero markup (while OpenAI waits for $1T). IMO they are optimizing for enterprise trust, not valuation.

OpenAI declared “code red” on ChatGPT threats and delayed ads. The real threat: Google.

NVIDIA launched an open self-driving model that thinks before it acts.

Resources

Automate everyday work with Google’s AI agents

How to go from core PM to AI PM: Todd Olson’s 5-layer technical pyramid

New Tools

Aha: AI employee for influencer marketing hit #1 on Product Hunt

X-Design: AI Agent for branding hit #1 on PH

Market

Anthropic acquired Bun (open-source JavaScript toolkit) and announced Claude Code hit $1B ARR. Six months after launch.

AI voice startup Gradium raised $70M.

And now on to today’s deep dive:

4 Helpful Nano Banana Pro Prompts

Last week I shared five Nano Banana Pro prompts and you loved them. I kept testing and found 4 more that are even more game-changing:

1/ 3D Cartoon Weather Visualization

Turn any weather app from boring numbers into something users screenshot and share.

Present a clear, 45° top-down isometric miniature 3D cartoon scene of [CITY], featuring its most iconic landmarks and architectural elements. Use soft, refined textures with realistic PBR materials and gentle, lifelike lighting and shadows. Integrate the current weather conditions directly into the city environment to create an immersive atmospheric mood. Use a clean, minimalistic composition with a soft, solid-colored background. At the top-center, place the title “[CITY]” in large bold text, a prominent weather icon beneath it, then the date (small text) and temperature (medium text). All text must be centered with consistent spacing, and may subtly overlap the tops of the buildings. Square 1080x1080 dimension.

2/ Fridge Scanner → Recipe Infographic

The feature every food app has promised but never delivered well. Now it works.

Scan what’s inside of the fridge and offer me an idea what can be cooked with the ingredients available with a detailed step by step recipe in a form of a simple infographic.

3/ Multilingual Object Labeling

If you’re building anything for language learners or global markets, steal this.

Draw a detailed {{pet shop}} scene and label every object with English words. Label format: First line: English word. Second line: IPA pronunciation. Third line: {Chinese} translation.

Credit: Crystal on X

4/ 3D Packaging → 2D Dieline

This one shocked me. Turn product photos into production-ready packaging templates.

Deconstruct this 3D product packaging photo into a precise, flattened 2D dieline template. Unfold the box into a complete technical net structure, revealing all panels, including previously hidden dust flaps, tucks, and glue tabs. Eliminate all perspective and lens distortion to create a perfect orthographic top-down view. Retain the exact high-fidelity texture and typography from the original photo but map it accurately onto the flat surface. Superimpose clean, technical die-cut lines (solid) and fold lines (dashed) in a contrasting color. Isolated on a pure white background, shadowless, print-ready production quality.

Finally, onto insights from a webinar I did this week:

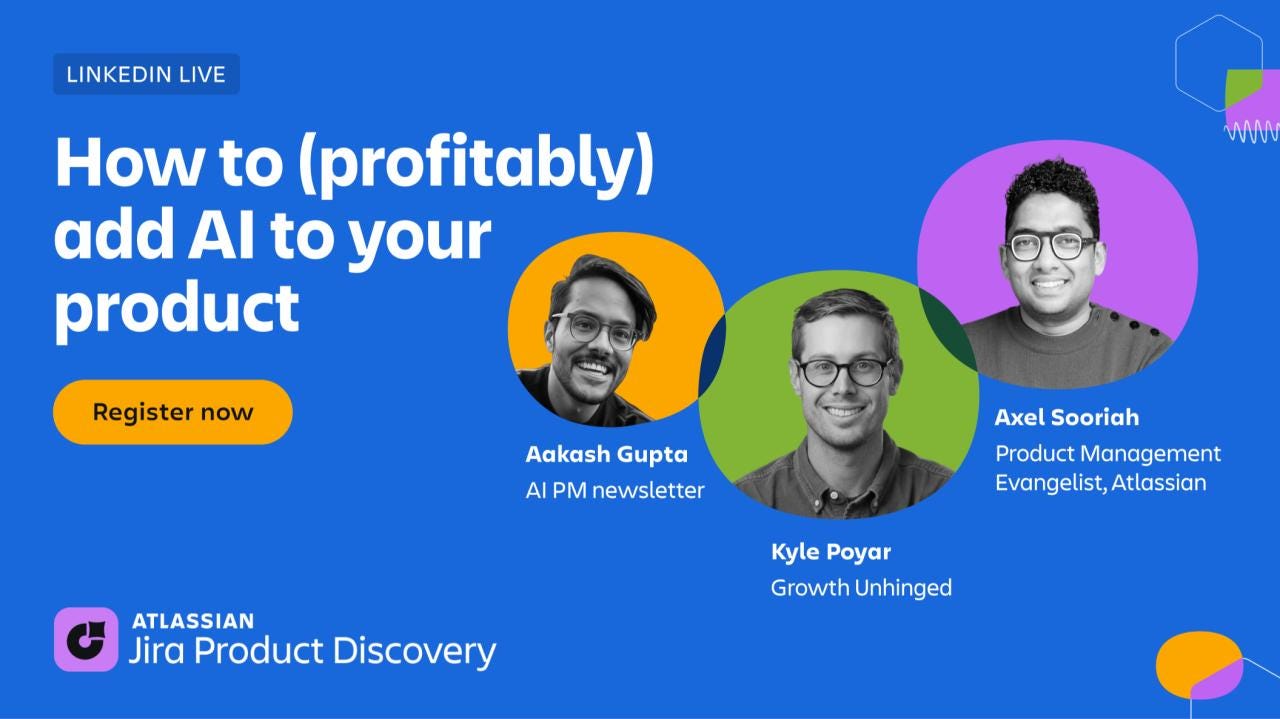

How to (profitably) add AI to your product

I sat down with Kyle Poyar (Growth Unhinged) and Axel Sooriah (Atlassian) to talk about adding AI to products profitably. Here’s what PMs get wrong and how to fix it.

Why most AI features fail (it’s not pricing)

The intuitive answer is “we mispriced it.” The real answer: the feature doesn’t move retention.

At Apollo, we shipped an AI email writer when ChatGPT exploded. Pricing was fine. The problem: users didn’t accept the AI drafts. It never became part of their workflow, so it didn’t move retention.

Compare that to Fortnite’s AI bot system. New players were getting crushed by hardcore grinders and rage-quitting. Epic added AI opponents as “cannon fodder” to smooth the on-ramp. Over 2.5 years and thousands of features, this was the #1 retention driver.

If your AI feature doesn’t measurably improve retention, pricing won’t save it.

The “hill climbing” approach to AI costs

PMs often optimize costs too early. “We can’t call that model - it’s too expensive.” This kills features before they prove value.

Instead:

1/ Define evals upfront: what does “good enough” look like?

2/ Climb the quality hill first: use whatever models you need to hit those evals, even if unprofitable

3/ Then climb down the cost hill: swap to cheaper models, reduce context windows, add caching
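The three steps above can be sketched in a few lines. This is a rough illustration, not how any of the companies mentioned do it: the model labels, eval scores, and per-task costs are all made up, and `climb` is a hypothetical helper.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str             # hypothetical model label
    eval_score: float     # share of eval cases passed, 0.0 to 1.0
    cost_per_task: float  # USD per task, made-up numbers

def climb(candidates, quality_bar):
    """Return (best_quality, cheapest_passing) for a given eval bar."""
    # Step 2: climb the quality hill, ignoring cost.
    best = max(candidates, key=lambda c: c.eval_score)
    if best.eval_score < quality_bar:
        # Nothing clears the bar yet, so cost optimization is premature.
        return best, None
    # Step 3: climb down the cost hill among models that still pass.
    passing = [c for c in candidates if c.eval_score >= quality_bar]
    cheapest = min(passing, key=lambda c: c.cost_per_task)
    return best, cheapest

candidates = [
    Candidate("frontier-large", 0.93, 0.40),
    Candidate("mid-tier", 0.88, 0.12),
    Candidate("open-small", 0.71, 0.02),
]

# Step 1: the eval bar is defined before any model is chosen.
best, cheapest = climb(candidates, quality_bar=0.85)
print(f"Prove value with: {best.name}")
print(f"Then move to: {cheapest.name}")
```

The point of the ordering: the cheapest model only gets picked from the set that already passes your evals, so cost never gets to veto quality.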

Figma is climbing up (proving PMF for Figma Make). Airbnb is climbing down (shifting from commercial APIs to open-source models after validating value).

You can’t penny-pinch your way out of a bad use case.

Why hybrid pricing is winning

Per-seat pricing is dangerous for AI. Your top 10% of power users can drive 70-80% of LLM spend. Charge everyone the same, and your best users become your least profitable.

What’s working: seat/platform fee + usage component.

Credits are becoming the standard. They give you a universal currency across AI actions and flexibility to adjust costs as models change without rewriting your entire pricing story.
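To make the margin math concrete, here is a small sketch comparing flat per-seat pricing with a seat fee plus a usage component. Every number is hypothetical (the seat fee, the included allowance, the overage markup, and the per-user LLM costs), picked so that one power user out of ten drives 75% of spend.

```python
# All figures are hypothetical, for illustration only.
SEAT_FEE = 30.0        # USD per user per month
INCLUDED_USAGE = 10.0  # USD of compute covered by the seat fee
OVERAGE_MARKUP = 1.5   # price charged per USD of compute past the allowance

# Monthly LLM cost per user: one power user, nine light users.
llm_cost = {"power_user": 81.0, **{f"light_user_{i}": 3.0 for i in range(1, 10)}}

def per_seat_margin(cost: float) -> float:
    """Margin when everyone pays the same flat seat fee."""
    return SEAT_FEE - cost

def hybrid_margin(cost: float) -> float:
    """Margin with a seat fee plus marked-up usage past the allowance."""
    overage = max(0.0, cost - INCLUDED_USAGE)
    revenue = SEAT_FEE + overage * OVERAGE_MARKUP
    return revenue - cost

power_share = llm_cost["power_user"] / sum(llm_cost.values())
print(f"Power user share of LLM spend: {power_share:.0%}")
for user in ("power_user", "light_user_1"):
    cost = llm_cost[user]
    print(f"{user}: per-seat {per_seat_margin(cost):+.2f}, hybrid {hybrid_margin(cost):+.2f}")
```

Under flat seats the power user loses money every month; with the usage component the same user becomes profitable, while light users see no change. In a credit system, the overage would be denominated in credits rather than dollars, but the shape of the math is the same.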

The one takeaway: Solve really hard, high-dollar problems. If your AI reliably removes a $30 human task for $2 in compute, pricing details are solvable. If you’re adding a chatbot no one asked for, everything else becomes painful.

That’s all for today. See you next week,

Aakash