How would you measure the success of GPT-6?

What evals would you look at for the success of our AI agent launch?

Sounds tough, right?

These are the types of questions companies are asking in AI product success metrics interviews.

It’s a 30-45 minute case interview that companies from OpenAI to Meta, and even companies like Ford, are adopting.

So if you want to land a high-paying AI PM job, you need to master it.

But There is No Content

Unfortunately, there is literally ZERO content online about these questions!

Everything you find online is just about general product success metrics.

But success metrics for AI are quite different:

You need to consider offline evals

You have to handle the non-deterministic nature of AI

You're always balancing latency against output quality

Models drift over time

And so much more…

That’s why you need a guide to the AI product success metrics interview specifically.

Continuing my AI PM Interview Series

I’ve been working to help you crush AI PM interviews. We’ve covered AI product sense and AI product design.

Today, we’re back with the next most important interview to nail: AI product success metrics.

Today’s Post

I’ve put together the web’s deepest guide to the AI success metrics interview:

Mock interview

What they are evaluating

The framework to use to ace these

The companies asking, their formats, and questions

Anti-patterns that kill candidates

83-question practice bank

3 worked examples

My practice GPT

1. Mock Interview

To get yourself into the right mindset to ace this case, I’ve recorded an end-to-end mock interview on this topic.

We answer a question that many companies have been asking:

How would you measure the success of our new agents launch?

Do you want my coaching to perform like this? Join my 12-week accelerator where we prepare you on every step of the job search:

It starts Monday. We only have 2 seats left, and the next one will be all the way in May. So if you’ll be job searching soon, join.

2. What They Are Evaluating

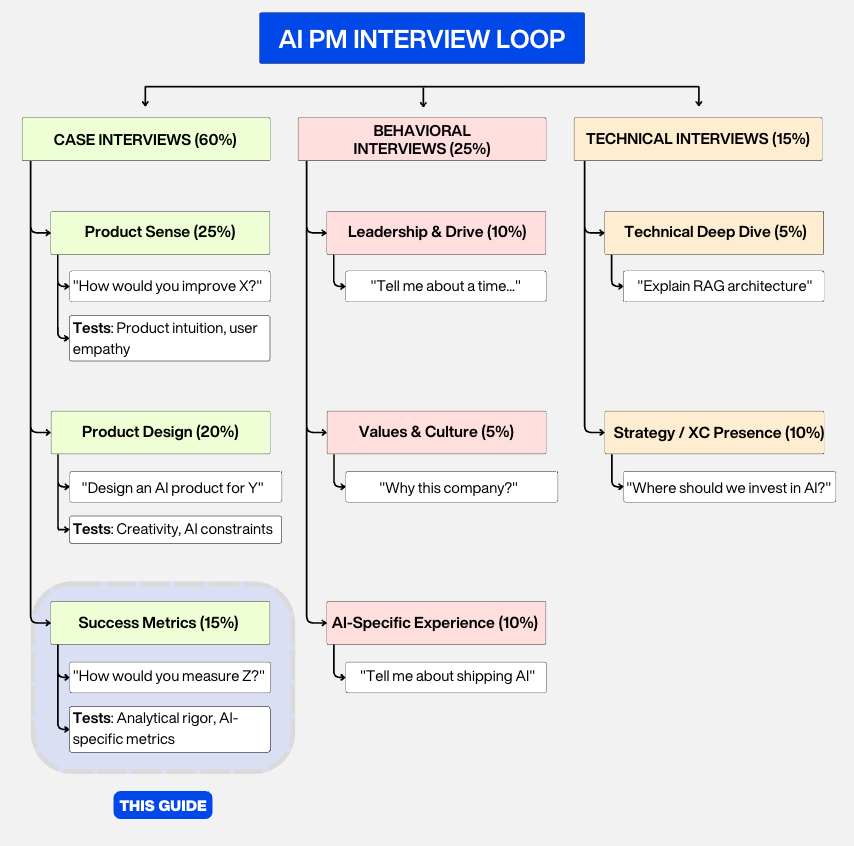

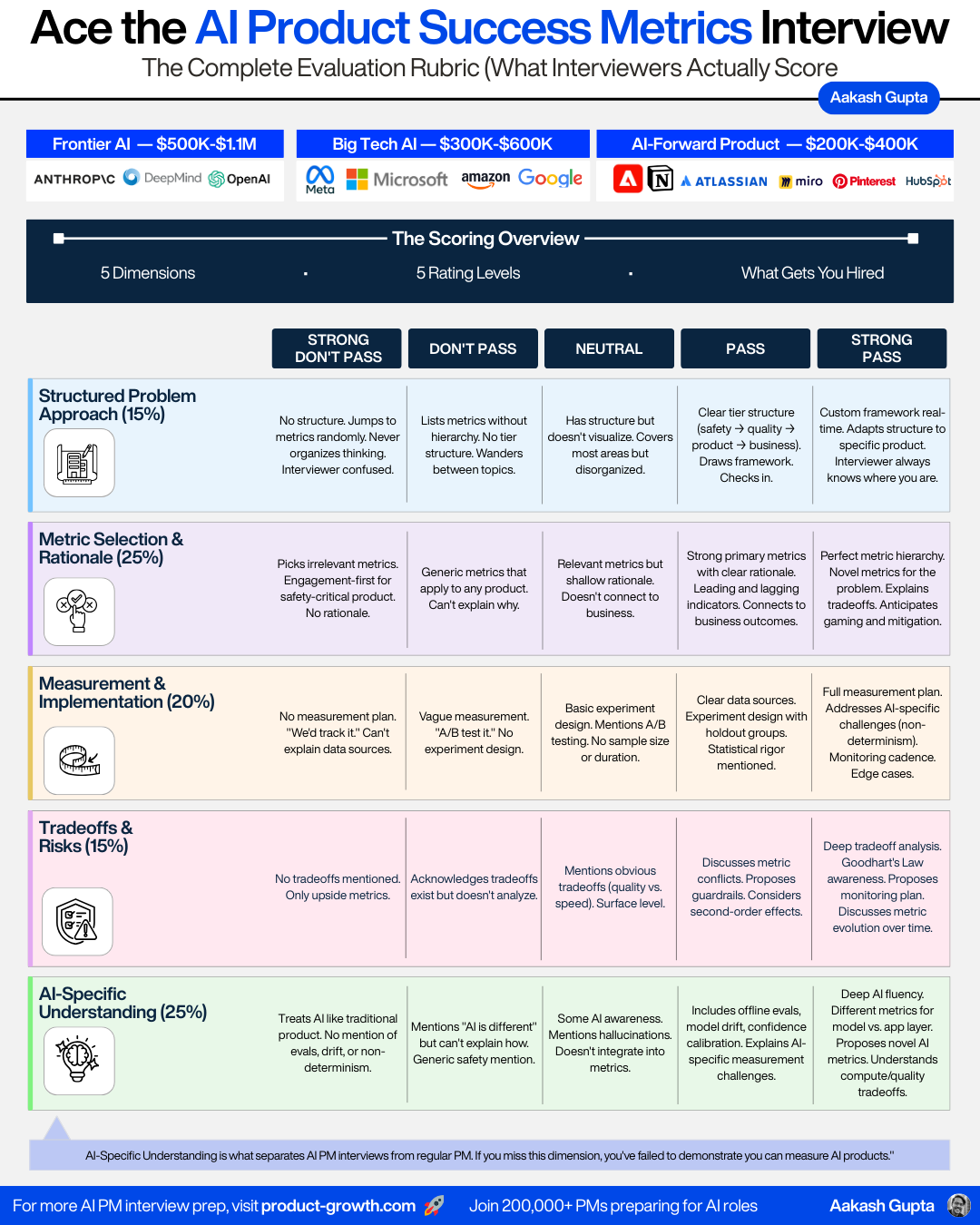

I talked to a few hiring managers and successful candidates. Here’s how to think about what they’re looking for. They want you to demonstrate:

Structured problem approach (15%): ideally with a custom framework that’s easy to follow

Metric selection & rationale (25%): with a strong hierarchy that addresses topics like gaming

Measurement & implementation (20%): you need to be able to show exactly how you’ll operationalize metrics

Tradeoffs & risks (15%): some candidates forget this altogether, but they want to see deep tradeoff analysis

AI-specific understanding (25%): they want you to demonstrate deep AI metrics fluency
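
If you want to self-grade a practice run against these weights, here is a minimal sketch in Python. The weights are the ones listed above. The 1-5 rating scale, the key names, and the `weighted_score` helper are assumptions for illustration only, not a scoring method any company is confirmed to use.

```python
# Weights taken from the rubric above (they sum to 100%).
# The key names and the 1-5 rating scale are illustrative assumptions.
RUBRIC_WEIGHTS = {
    "structured_problem_approach": 0.15,
    "metric_selection_and_rationale": 0.25,
    "measurement_and_implementation": 0.20,
    "tradeoffs_and_risks": 0.15,
    "ai_specific_understanding": 0.25,
}


def weighted_score(ratings: dict[str, float]) -> float:
    """Combine per-dimension ratings into one weighted score.

    `ratings` maps each rubric dimension to a self-assessed rating
    (assumed here to be on a 1-5 scale).
    """
    missing = set(RUBRIC_WEIGHTS) - set(ratings)
    if missing:
        raise ValueError(f"Missing ratings for: {sorted(missing)}")
    return sum(RUBRIC_WEIGHTS[name] * ratings[name] for name in RUBRIC_WEIGHTS)


# Hypothetical self-assessment after one mock interview.
example_ratings = {
    "structured_problem_approach": 4,
    "metric_selection_and_rationale": 3,
    "measurement_and_implementation": 4,
    "tradeoffs_and_risks": 2,
    "ai_specific_understanding": 3,
}

print(round(weighted_score(example_ratings), 2))
```

Because metric selection and AI-specific understanding carry half of the total weight between them, a low rating on either one pulls the overall score down more than a weak tradeoffs section does.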

So how do you ace this rubric? That’s the rest of today’s deep dive: the framework to use, how companies vary in what they’re looking for, and worked examples.
