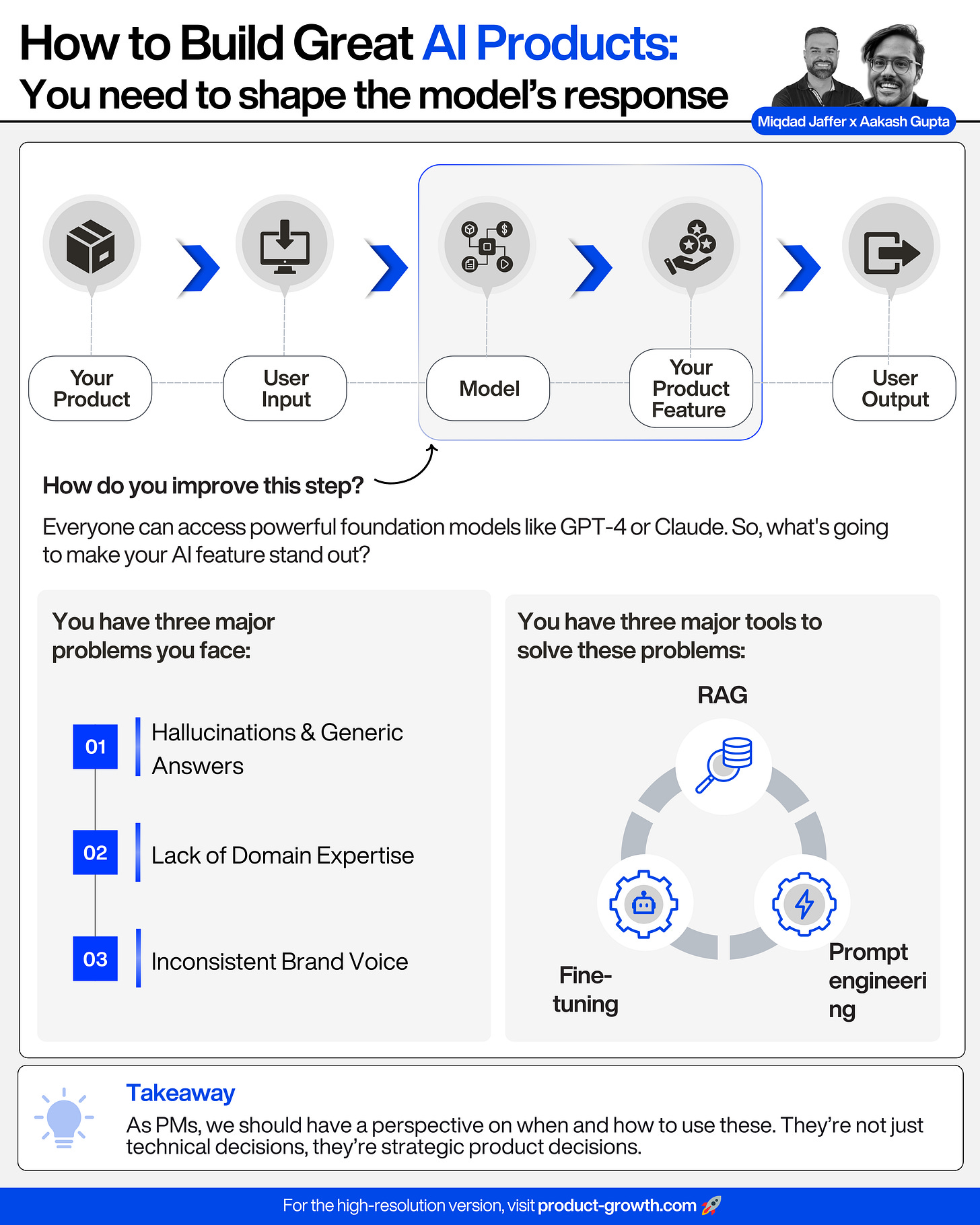

Context Engineering Guide: Step-by-Step RAG, Fine-tuning, and Prompt Engineering

How do you build AI features that actually work for your product instead of generic AI wrappers? Today we walk you through the implementation and decision frameworks you need.

We can all come up with great ideas for LLMs to enhance our products, but the devil is in the details:

How do we get our AI to adopt a very specific writing style?

How do we get our AI to use our latest product documentation?

What's the fastest way to test an AI concept and get it into users' hands for feedback?

These aren't just technical questions. They're strategic product decisions.

Everyone can access powerful foundation models like GPT-4.5 or Claude Opus 4. So, what's going to make your AI feature stand out?

Hint: It's not just about having 'AI'.

It's about making that AI uniquely yours and genuinely useful.

The 3 Approaches to Optimizing AI

Base LLMs are like brilliant interns: incredibly capable, but they don't know your company's specific jargon, your proprietary data, or the nuanced style your customers expect.

Leaving them "off-the-shelf" often leads to:

Hallucinations & Generic Answers

Lack of Domain Expertise

Inconsistent Brand Voice

You have three major ways to address these problems:

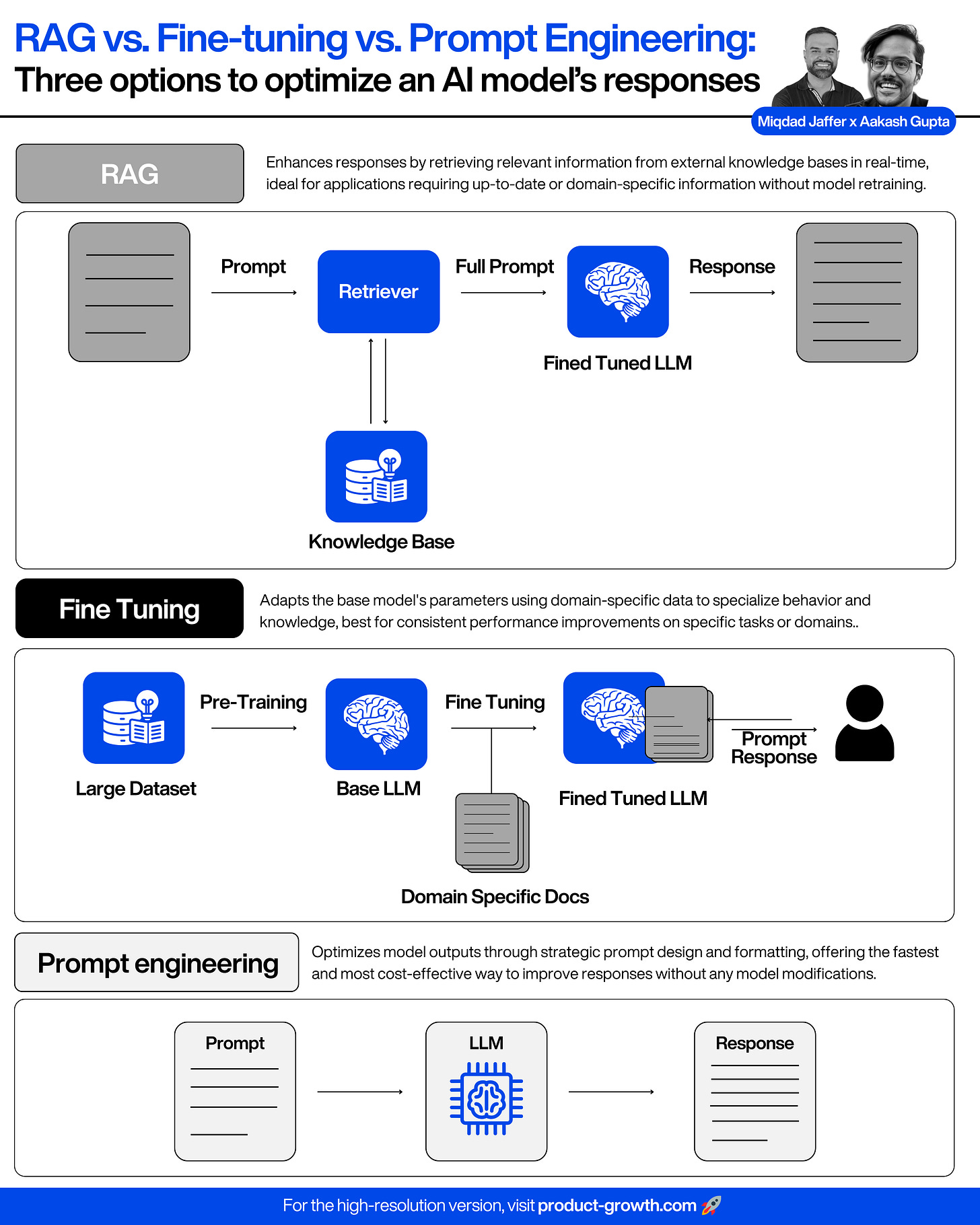

RAG (Retrieval Augmented Generation)

Have the model search for information that isn't in its training data, then incorporate what it finds into its answer

Fine-tuning

Specializing a model by further training it on your own examples

Prompt engineering

Better specifying what you’re looking for from the model
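To make the RAG idea concrete, here is a minimal Python sketch of the retrieve-then-augment step. It assumes a tiny in-memory set of hypothetical product docs (`DOCS`) and uses simple word overlap as a stand-in for the embedding search a production system would typically use. It only builds the augmented prompt and does not call any particular model API. The "answer using only the context" instruction is a common prompt-engineering pattern for reducing hallucinations, not a guarantee.

```python
import re
from collections import Counter

# Hypothetical product docs standing in for a real knowledge base.
DOCS = {
    "pricing": "The Pro plan costs $20 per month and includes unlimited projects.",
    "export": "You can export reports as CSV or PDF from the Reports tab.",
    "sso": "Single sign-on (SSO) is available on the Enterprise plan only.",
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query: str, doc: str) -> int:
    """Count shared words: a toy stand-in for embedding similarity."""
    overlap = Counter(tokenize(query)) & Counter(tokenize(doc))
    return sum(overlap.values())


def retrieve(query: str, k: int = 1) -> list[str]:
    """Return the k docs with the highest overlap score."""
    ranked = sorted(DOCS.values(), key=lambda doc: score(query, doc), reverse=True)
    return ranked[:k]


def build_prompt(query: str, k: int = 1) -> str:
    """Augment the user's question with retrieved context before sending it to an LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


print(build_prompt("How do I export a report as PDF?"))
```

Swapping `score` for vector similarity and `DOCS` for a document store keeps the same shape: retrieve the relevant text, then inject it into the prompt so the model can use information it was never trained on.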

The question is: when should you use each, and why?

Today, we’re breaking down the definitive framework for choosing between prompt engineering, RAG, and fine-tuning.

Introducing Your Co-Author

To make sure it’s definitive, I've partnered with Miqdad Jaffer, Director of PM at OpenAI.

Miqdad teaches the AI PM Certification course, where he's helped hundreds of students master these exact techniques through hands-on projects.

Use my code AAKASH25 for $500 off. The next cohort starts July 13th.

Today’s Post

We’re going to give you the context, decision frameworks, and a practical step-by-step walkthrough to help you build the intuition. Once you’ve built each approach yourself, you’ll have the AI-engineer-level knowledge to speak confidently with your engineers:

Mistakes

Pros and Cons

Decision Framework

Step-by-Step: Building Each

How Much Each Actually Costs

Think of this post less as an article and more as a course lesson you follow along with.

TL;DR: Start with prompt engineering (hours/days), escalate to RAG when you need real-time data ($70-1000/month), and only use fine-tuning when you need deep specialization (months + 6x inference costs).

1. The 3 Major Mistakes We See

Let's start by understanding what not to do, then build up to the right approach.


Subscribe to Product Growth to keep reading this post and get 7 days of free access to the full post archives.