The PM's Guide to Testing AI Features

How AI has upended QA and made it a key PM skill

You can’t escape it. Every product team is being asked to ship AI features. Fast.

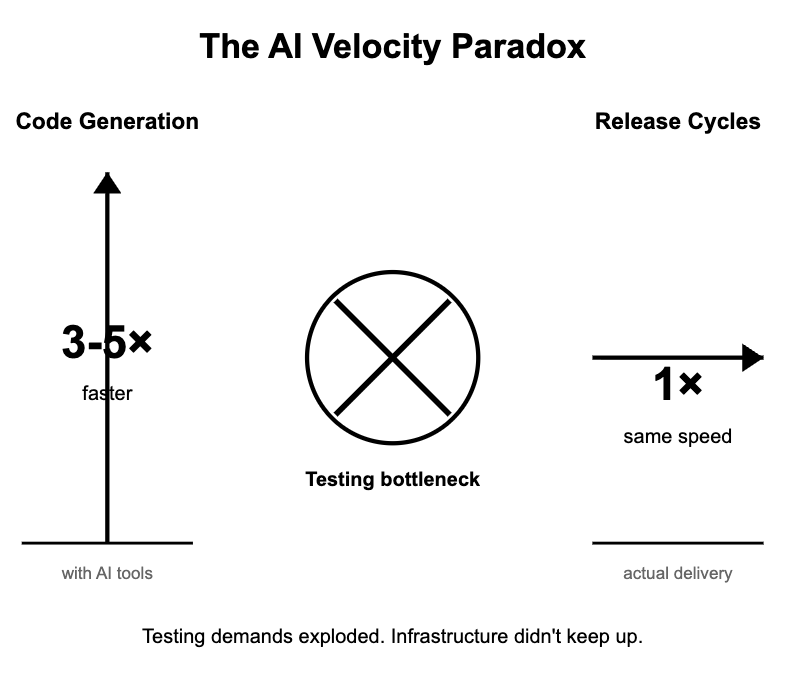

And development teams are shipping AI code 3-5x faster than before using tools like Cursor and Claude Code. But their actual release cycles haven't sped up at all.

Why? Because traditional testing approaches can’t handle AI’s unique challenges.

At a Series B SaaS company I spoke with last week, AI feature builds were taking 4 hours. Their traditional web features? 15 minutes. Same codebase, same infrastructure. The difference? AI testing complexity that their existing workflows couldn't handle.

The result? You're promising faster AI feature delivery, but your actual release cycles are hitting unexpected bottlenecks because traditional testing workflows weren't designed for AI outputs.

Today's Post

Most PMs are shipping AI features without understanding how their role in testing needs to fundamentally change.

But the PMs who understand AI testing? They're shipping faster, with higher confidence, and building better products.

I’m bringing you the first practical guide to testing AI features as a PM:

Why AI testing is the new core skill every AI PM needs

The 4 ways AI features break traditional testing approaches

The AI PM’s Tech Stack (yes, you have one now)

Step-by-step case study with real metrics

3 infographics to share with your team

1. Why AI Testing is the New Core AI PM Skill

Six months ago, being an AI PM meant knowing how to write prompts and pick the right model.

Today, it's about understanding how to ship AI features reliably at scale.

The landscape has shifted. Companies like Anthropic, OpenAI, and Google have commoditized the models. Prompt engineering is table stakes. What separates winning AI PMs from the rest?

Operational excellence in shipping AI products.

That’s why I’ve written so much recently about evals, observability, and LLM judges.

They are part of one bigger AI topic: Testing.

And the PMs who understand AI testing holistically have a massive advantage:

They ship faster because they catch issues earlier

They have higher confidence in releases because they understand quality

They build better products because they design testability from day one

They get promoted because they deliver results, not just features

Think about it. Your CEO doesn’t care if you can write a great prompt. They care if you can ship AI features that work, scale, and don’t break the core product.

2. The 4 Ways AI Features Break Traditional Testing

Traditional features are predictable. Given input A, you get output B. Every time.

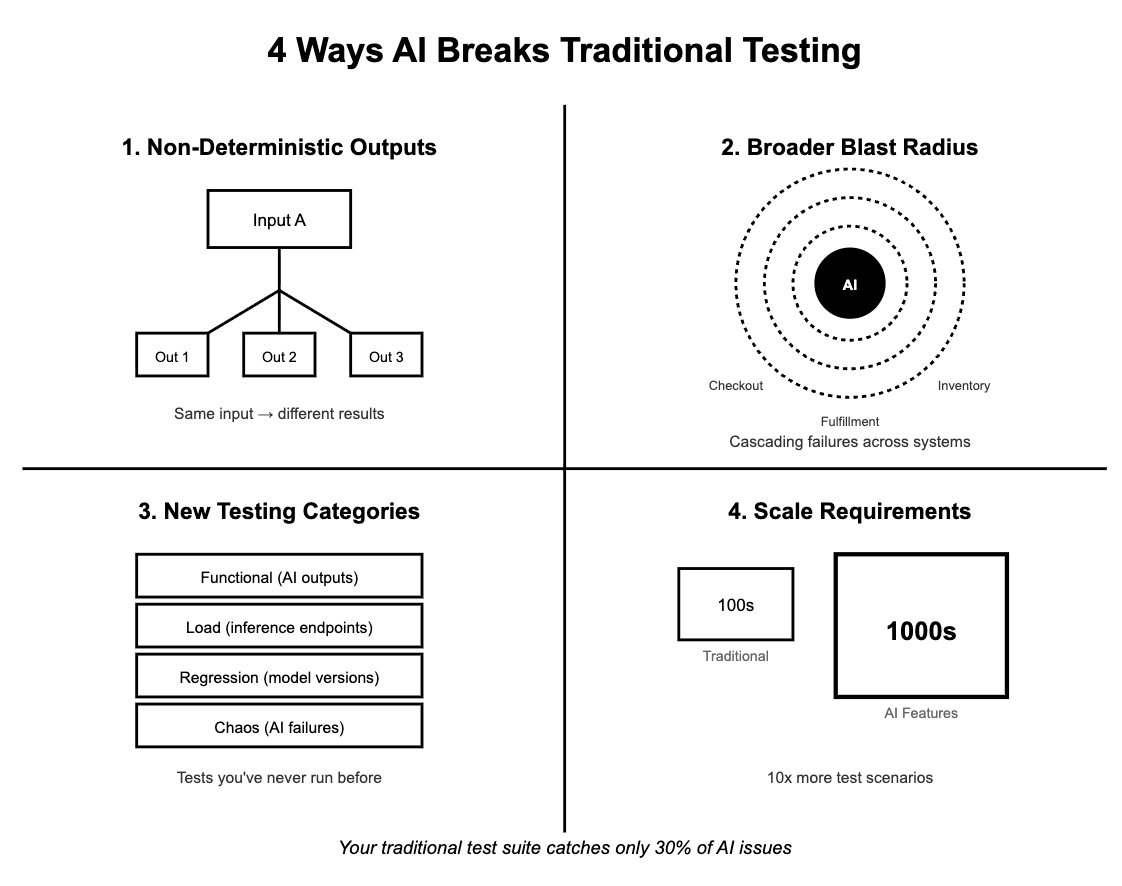

AI features? Not so much. Here are the 4 fundamental ways AI breaks traditional testing:

1. Non-deterministic outputs mean the same input can produce different results. Your chatbot might give a perfect answer on Tuesday and complete nonsense on Wednesday. Both responses pass your existing unit tests.

2. Broader blast radius across systems. Your AI chat feature doesn't just affect the chat module. It can break checkout flows in unexpected ways. When an AI recommendation engine starts suggesting out-of-stock items, it cascades through inventory, fulfillment, and customer service systems.

3. New testing categories you've never had to think about:

Functional testing for AI outputs (not just code paths)

Load testing for inference endpoints under real traffic

Regression testing across model versions

Chaos testing for when AI systems fail (and they will)

4. Scale requirements that break traditional approaches. AI features generate thousands of test scenarios. Your old test suite was designed for hundreds.

The result? Your traditional test suite catches only a fraction of the issues that matter for AI features.
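To make the non-determinism point concrete, here is a minimal sketch of what testing an AI output can look like when exact-match assertions no longer work. Everything in it is an assumption for illustration: `fake_chatbot` is a hypothetical stand-in for a real model call, the property checks are made up, and the 95% release gate is an arbitrary threshold, not a recommendation.

```python
import random


def fake_chatbot(prompt: str, rng: random.Random) -> str:
    """Hypothetical stand-in for a model call (assumption, not a real API)."""
    answers = [
        "Your order ships in 3-5 business days.",
        "Orders usually ship within 3-5 business days.",
        "I am a teapot.",  # the occasional nonsense reply
    ]
    # Same prompt, different answer on each call: that is the non-determinism.
    return rng.choices(answers, weights=[45, 45, 10])[0]


def passes_checks(output: str) -> bool:
    """Check properties of the answer instead of comparing to one exact string."""
    return "3-5 business days" in output and len(output) < 200


def pass_rate(prompt: str, runs: int, seed: int) -> float:
    """Run the same prompt many times and report the share of acceptable answers."""
    rng = random.Random(seed)  # seeded so the test run itself is repeatable
    results = [passes_checks(fake_chatbot(prompt, rng)) for _ in range(runs)]
    return sum(results) / runs


if __name__ == "__main__":
    rate = pass_rate("When will my order ship?", runs=200, seed=7)
    print(f"pass rate: {rate:.1%}")
    # Assumed release gate: ship only if at least 95% of runs pass.
    print("release gate:", "PASS" if rate >= 0.95 else "FAIL")
```

The shift for PMs is in the question you ask. Instead of "did the test pass?", you ask "what pass rate are we willing to ship at?", and that threshold is a product decision.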

As Dmitry Fonarev, CEO of Testkube, puts it:

"The side effect of AI generating more code is that the overall testing of software applications becomes more critical. We can't just run a small set of tests against a subsystem anymore. We need to validate what an API change may have on second, third, or fourth order systems behind it."

Translation for PMs: Your tests must anticipate model drift, not just code changes. Otherwise you’re stuck managing regressions you didn’t cause.
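Here is one way a regression check across model versions might look, as a rough sketch. The eval set, the canned answers, and the names `model_v1` and `model_v2` are all hypothetical; in practice the two functions would call the old and new model versions.

```python
from typing import Callable, Dict, List, Tuple

# Each eval case: (prompt, substring an acceptable answer must contain).
EvalCase = Tuple[str, str]

EVAL_SET: List[EvalCase] = [
    ("refund policy?", "30 days"),
    ("shipping time?", "3-5 business days"),
    ("support hours?", "9am"),
]

# Canned answers standing in for two model versions (assumption for illustration).
_V1_ANSWERS = {
    "refund policy?": "Refunds are accepted within 30 days.",
    "shipping time?": "Orders ship in 3-5 business days.",
    "support hours?": "Support is open from 9am to 5pm.",
}
_V2_ANSWERS = {
    **_V1_ANSWERS,
    "support hours?": "Support is available on weekdays.",  # drifted answer
}


def model_v1(prompt: str) -> str:
    return _V1_ANSWERS.get(prompt, "")


def model_v2(prompt: str) -> str:
    return _V2_ANSWERS.get(prompt, "")


def score(model: Callable[[str], str], cases: List[EvalCase]) -> Dict[str, bool]:
    """Run every eval case through a model and record pass/fail per prompt."""
    return {prompt: expected in model(prompt) for prompt, expected in cases}


def regressions(old: Dict[str, bool], new: Dict[str, bool]) -> List[str]:
    """Prompts that passed on the old version but fail on the new one."""
    return [prompt for prompt in old if old[prompt] and not new.get(prompt, False)]


if __name__ == "__main__":
    old_scores = score(model_v1, EVAL_SET)
    new_scores = score(model_v2, EVAL_SET)
    print("regressed prompts:", regressions(old_scores, new_scores))
```

No application code changed between the two runs. Only the model did, which is why a fixed eval set that you rerun on every model update catches what a code-change-triggered test suite would miss.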

For a deeper dive into the 7 testing categories you must know, see my complete AI testing taxonomy guide.

From Problem to Solution

You understand the problem. Now get the solution:

3-element testing framework you can implement this week

The AI PM’s tech stack and architecture guide with ready-to-share infographics

Real case study: How one team cut builds from 4 hours to 20 minutes

Conversation framework for every team you work with

The AI PMs who master testing infrastructure will dominate. The ones who don't will manage firefights instead of building products.
