Session Replays: Advanced Techniques for Product Managers and Product Leaders

How can you use session replays to drive better product decisions? Today I go deep on one of the most overlooked weapons in a product team's arsenal.

Analytics can lie to you.

At thredUP, our dashboards showed a perfectly healthy pricing system. Conversion rates looked good. Revenue per visitor was up. Our A/B tests were winning.

But customer support kept getting the same message:

"These prices don't make sense."

When we finally dug deeper, we discovered customers bouncing between similar items, confused by price differences. They were comparing dozens of products trying to understand our logic.

The metrics couldn't show us these behavior patterns. Customer interviews only gave us post-rationalized explanations.

It wasn't until we watched users shop that we understood the real problem: our "optimized" pricing was creating cognitive load.

Pure data-driven development has limits. Numbers tell you what happened, but not why.

The best product decisions come from combining quantitative data with qualitative understanding.

Brought To You By LogRocket

LogRocket is the AI-first session replay and analytics platform that proactively identifies opportunities to improve your websites and mobile apps. Its Galileo AI is your 24/7 product analyst, watching every user session and developing a human-like understanding of the user experience and where users struggle.

LogRocket helps leading PLG companies build better products by surfacing the biggest opportunities to improve digital experience. And thanks to its Galileo AI, you don’t need to manually watch hundreds of sessions or wait for users to complain to find the areas ripe for optimization.

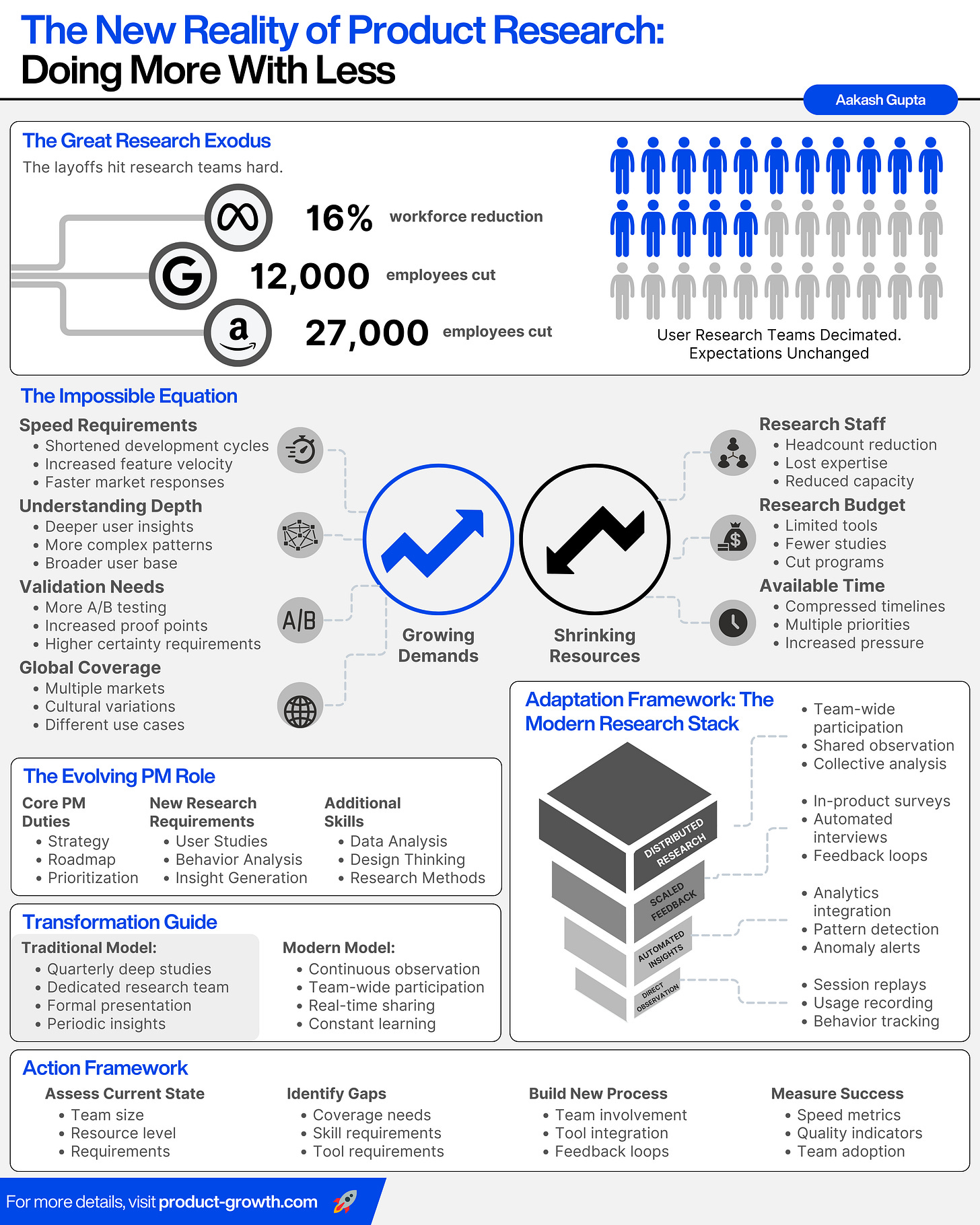

Why It Matters: Product Teams Are More Stretched Than Ever

The layoffs hit research teams hard.

Meta cut 13% of its workforce. Google cut 12,000 employees. Amazon cut 27,000.

Which function got hit hardest? On the product development side of the house, user research teams were decimated. Many companies lost 30-50% of their researchers.

But the expectations haven't changed. Teams still need to:

Understand user behavior

Validate product decisions

Ship faster than ever

Do more with less

The old model of periodic user research studies is breaking.

Product managers are now expected to be researchers, analysts, and designers - all at once.

We need ways to scale user insights across teams.

The Return to Direct User Observation

The best product teams have always watched users.

Gaming companies mastered this years ago. They put players in rooms, watched them play, recorded their reactions.

But most product teams can't do this anymore:

Remote work changed everything

Research teams are smaller

Development cycles are faster

Users are global

We need tools that bring back direct observation - at scale.

That's where session replays come in.

Not because they're perfect. They're not.

But because they let us see reality again.

Today's Post

Words: X | Est. Reading Time: X mins

I've led product at multiple high-growth companies, from startups to unicorns. Today I'm sharing everything I've learned about using session replays effectively:

When to Use Session Replays

How to Extract Real Insights from Session Replays

Building Your Session Replay Practice

Common Pitfalls

The Future of Product Management

1. When to Use Session Replays

Session replays are fundamentally a tool of observation, not interpretation.

This single characteristic determines everything about when they're valuable - and when they're not.

Principle 1 - Observation vs. Interpretation

Think about product development questions in two categories:

Observable Questions:

How do users navigate?

Where do they get stuck?

What paths do they take?

When do they hesitate?

Interpretive Questions:

Why did they choose our product?

What features should we build?

How much would they pay?

What problems matter most?

Session replays can only answer observable questions. They show behavior, not motivation.

This gives us our first principle: Use session replays when seeing behavior answers your question.

Principle 2 - Time

Product questions also exist across time horizons:

Current Behavior:

How are users using the product now?

What problems exist today?

Where is friction happening?

Future Possibilities:

What could the product become?

What new problems could we solve?

How might behavior change?

Session replays can only show current behavior. They're rooted in today's reality, not tomorrow's potential.

Second principle: Use session replays to understand what is, not what could be.

Principle 3 - Scale

Session replays provide depth at the expense of breadth. Here’s what I mean.

Deep Understanding:

Individual user journeys

Specific interaction patterns

Exact friction points

Broad Understanding:

Market trends

Feature adoption rates

Overall user satisfaction

Session replays are a microscope, not a telescope.

Third principle: Use session replays when depth matters more than breadth.

Putting It Together: When to Watch

These principles give us clear guidance on when session replays are valuable:

High Value

Problem Validation
- Observable current behavior
- Needs depth of understanding
- Specific friction to watch

Launch Monitoring
- Current user interaction
- Specific flows to observe
- Needs detailed insight

Low Value

Problem Discovery
- Requires interpretation
- About future potential
- Needs broad understanding

Strategic Decisions
- Beyond observable behavior
- About future direction
- Requires market breadth

To illustrate: At Apollo.io, session replays transformed our pricing page optimization.

Not because they told us what to build, but because they showed us exactly how users struggled with what we had built.

Normally there would be a paywall here. But thanks to LogRocket, this entire deep dive is free for all subscribers.

Reach out to their team to start a free 14-day trial of LogRocket and its Galileo AI.

2. How to Extract Real Insights from Session Replays

Most teams watch sessions wrong.

They pick random sessions. Watch them end-to-end. Hope for insights.

Let's fix that.

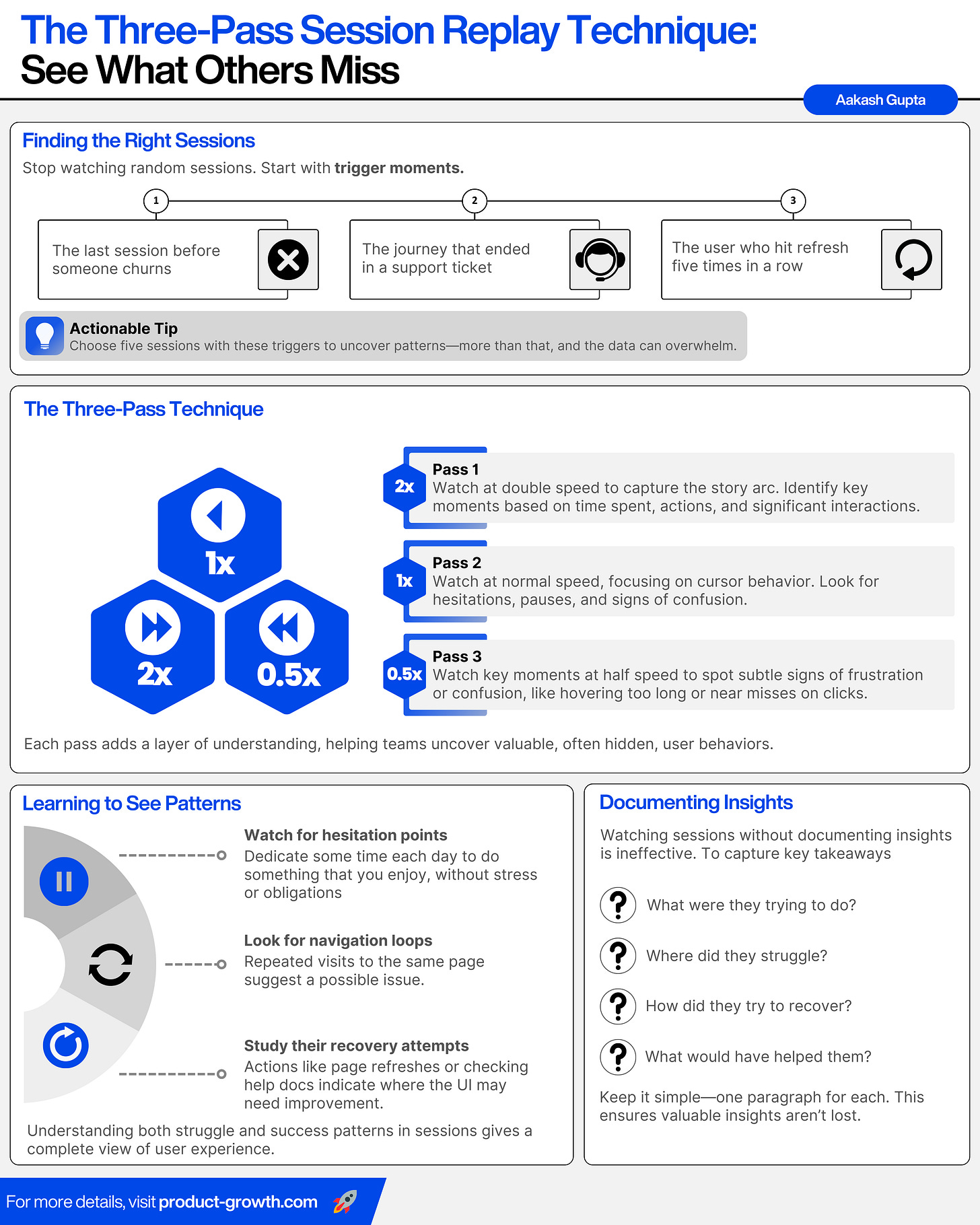

Finding the Right Sessions

Stop watching random sessions. Start with trigger moments.

The most valuable sessions come from specific behaviors:

The last session before someone churns

The journey that ended in a support ticket

The user who hit refresh five times in a row

These moments tell stories.

Pick five of them. That's enough to spot patterns. More than that and you'll get lost in the noise.
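If your replay tool lets you export session metadata, the trigger-moment filter can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the `Session` fields (`churned_after`, `support_ticket`, `max_consecutive_refreshes`) are hypothetical stand-ins for whatever your tool and CRM actually record, and "most recent first" is just one reasonable way to choose which five to watch.

```python
from dataclasses import dataclass


# Hypothetical session record; field names are illustrative, not any
# specific replay tool's export format.
@dataclass
class Session:
    session_id: str
    user_id: str
    started_at: int                 # Unix timestamp in seconds
    churned_after: bool             # last session before the user churned
    support_ticket: bool            # session ended in a support ticket
    max_consecutive_refreshes: int  # longest run of back-to-back refreshes


def is_trigger(session: Session, refresh_threshold: int = 5) -> bool:
    """True if the session contains one of the trigger moments."""
    return (
        session.churned_after
        or session.support_ticket
        or session.max_consecutive_refreshes >= refresh_threshold
    )


def pick_trigger_sessions(sessions: list[Session], limit: int = 5) -> list[Session]:
    """Keep only trigger sessions, most recent first, capped at `limit`."""
    triggered = [s for s in sessions if is_trigger(s)]
    triggered.sort(key=lambda s: s.started_at, reverse=True)
    return triggered[:limit]
```

The cap of five mirrors the rule of thumb above; raising `limit` is easy, but the point is to stop before the noise sets in.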

The Three-Pass Technique

Watching sessions is a skill. Like any skill, it needs a system:

Pass 1

Start with the timeline. Watch at double speed. You're looking for the story arc - how long did they stay? What were they trying to do? What moments mattered?

Mark those key moments. You'll come back to them.

Pass 2

Now slow it down. Watch at normal speed. This time, you're studying their movements. Where does the cursor hesitate? When do they stop? What confuses them?

Pass 3

Finally, zoom in. Take those key moments you marked and watch them at half speed. This is where the real insights hide.

A cursor that hovers for three seconds too long. A frustrated click that almost hits its target. A moment of confusion that leads to abandonment.

These micro-moments tell you everything.
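The three passes also make it easy to budget time before a review. A rough sketch, assuming pass 2 covers the whole session at normal speed and pass 3 covers only the moments you marked; the function name and inputs are illustrative:

```python
def three_pass_minutes(session_minutes: float, key_moment_seconds: list[float]) -> dict[str, float]:
    """Estimate viewing time (in minutes) for the three-pass technique.

    Assumes passes 1 and 2 cover the whole session, while pass 3
    replays only the marked key moments.
    """
    pass_1 = session_minutes / 2.0                   # whole session at 2x speed
    pass_2 = session_minutes / 1.0                   # whole session at normal speed
    pass_3 = (sum(key_moment_seconds) / 60.0) / 0.5  # key moments at half speed
    return {
        "pass_1": pass_1,
        "pass_2": pass_2,
        "pass_3": pass_3,
        "total": pass_1 + pass_2 + pass_3,
    }


# An 8-minute session with three 20-second key moments:
print(three_pass_minutes(8, [20, 20, 20]))
# → {'pass_1': 4.0, 'pass_2': 8.0, 'pass_3': 2.0, 'total': 14.0}
```

Under these assumptions, five trigger sessions of that length come to a bit over an hour of focused review.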

Learning to See Patterns

Session replays speak their own language. You need to learn to listen.

Watch for hesitation points. The cursor that dances between two options. The back-and-forth movement that screams confusion. The almost-clicks that signal uncertainty.

Look for navigation loops. Users don't wander aimlessly. When they return to the same page three times, they're telling you something's wrong.

Study their recovery attempts. Every page refresh is a mini-failure. Every return to the help docs is a moment your UI failed them.
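If your tool exports the ordered list of pages in a session, the loop and recovery signals above can be roughed out as simple counts. A sketch under assumptions: refreshes are approximated as back-to-back loads of the same URL, help docs are assumed to live under a hypothetical `/help` path, and the three-visit threshold comes from the rule of thumb above. Treat the output as a shortlist of moments to watch, not a verdict.

```python
from collections import Counter


def navigation_loops(page_views: list[str], min_visits: int = 3) -> list[str]:
    """Pages the user landed on at least `min_visits` times in one session."""
    counts = Counter(page_views)
    return sorted(page for page, n in counts.items() if n >= min_visits)


def refresh_count(page_views: list[str]) -> int:
    """Approximate refreshes as back-to-back loads of the same page."""
    return sum(1 for prev, cur in zip(page_views, page_views[1:]) if prev == cur)


def help_doc_visits(page_views: list[str], help_prefix: str = "/help") -> int:
    """Count entries into the help docs from a non-help page."""
    count = 0
    previous = ""
    for page in page_views:
        if page.startswith(help_prefix) and not previous.startswith(help_prefix):
            count += 1
        previous = page
    return count
```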

But don't just study failure. Watch for success too.

The users who flow smoothly through your product tell you as much as the ones who struggle. Their paths become your north star.

Documenting Insights

Watching sessions without documenting insights is like reading without taking notes. The knowledge slips away.

Keep it simple. For each session, capture the story:

What were they trying to do?

Where did they struggle?

How did they try to recover?

What would have helped them?

One paragraph for each. No more.
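For teams that keep these notes in a shared doc or wiki, a fixed structure keeps them comparable across watchers. A minimal Python sketch that assumes nothing about your tooling: the `SessionNote` class is hypothetical and simply mirrors the four questions, refusing multi-paragraph answers to enforce the one-paragraph rule.

```python
from dataclasses import dataclass


@dataclass
class SessionNote:
    session_id: str
    goal: str        # What were they trying to do?
    struggle: str    # Where did they struggle?
    recovery: str    # How did they try to recover?
    would_help: str  # What would have helped them?

    def render(self) -> str:
        """Render the note as plain text, one paragraph per question."""
        sections = [
            ("What were they trying to do?", self.goal),
            ("Where did they struggle?", self.struggle),
            ("How did they try to recover?", self.recovery),
            ("What would have helped them?", self.would_help),
        ]
        lines = [f"Session {self.session_id}"]
        for question, answer in sections:
            # Enforce the "one paragraph, no more" rule.
            if "\n\n" in answer.strip():
                raise ValueError(f"Keep '{question}' to a single paragraph")
            lines.append(f"{question}\n{answer.strip()}")
        return "\n\n".join(lines)
```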

The Quantitative + Qualitative Framework

Most teams treat session replays and analytics as separate worlds. This is a mistake.

The magic happens when you combine them.

Here's how to build a unified analysis framework:

Step 1: Start with the Numbers

Before watching any sessions, gather your key metrics:

Conversion rate by page

Time on page

Bounce rate

Error rates

Support ticket volume

Watch for sudden changes, unusual patterns, demographic differences, and device-specific issues.

These become your investigation triggers.
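Spotting sudden changes can be as simple as comparing the latest value of each metric to its recent average. A sketch, assuming you already have a short history per metric (say, one value per week); the 20% threshold, the metric names, and the numbers in the example are illustrative, not a recommendation:

```python
def investigation_triggers(
    metrics: dict[str, list[float]], threshold: float = 0.20
) -> list[str]:
    """Return metrics whose latest value moved more than `threshold`
    (as a relative change) away from the average of earlier values."""
    flagged = []
    for name, series in metrics.items():
        if len(series) < 2:
            continue  # not enough history to compare against
        history = series[:-1]
        baseline = sum(history) / len(history)
        if baseline == 0:
            continue  # relative change is undefined
        change = (series[-1] - baseline) / baseline
        if abs(change) > threshold:
            flagged.append(name)
    return sorted(flagged)


# Illustrative weekly values, oldest first:
weekly = {
    "checkout_conversion": [0.30, 0.31, 0.29, 0.22],
    "bounce_rate": [0.40, 0.41, 0.39, 0.40],
    "error_rate": [0.010, 0.010, 0.010, 0.030],
}
print(investigation_triggers(weekly))
# → ['checkout_conversion', 'error_rate']
```

A drop in conversion alongside a spike in errors is the kind of pair worth pulling sessions for.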

Step 2: Use Data to Create Your Watch Lists

For each metric that matters, create two session lists:

Success Cases:

Top 10% in conversion

Fastest completions

Smoothest paths

Failure Cases:

Bottom 10% in conversion

Abandonment points

Error encounters

This gives you contrast. You're not just seeing what's wrong - you're seeing what "good" looks like.

Then go watch these sessions.
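Once you have a per-session score, building the two lists is a sort and a slice. A sketch that assumes a hypothetical score where higher is better; for a metric like time-to-complete, where lower is better, negate it first so the fastest completions rank at the top:

```python
def watch_lists(scores: dict[str, float], percent: int = 10) -> tuple[list[str], list[str]]:
    """Split session IDs into (success, failure) watch lists.

    `scores` maps session ID -> a per-session score where higher is better.
    Returns the top `percent` and bottom `percent` of sessions (at least one each).
    """
    ranked = sorted(scores, key=lambda sid: scores[sid], reverse=True)
    k = max(1, len(ranked) * percent // 100)  # integer math avoids float rounding
    success = ranked[:k]
    failure = ranked[-k:][::-1]  # worst session first
    return success, failure
```

With very few sessions the two lists can overlap, so this only makes sense once you have a decent sample.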

Bonus - Build Your Dashboard

Consider creating a live dashboard combining:

Quantitative Signals:

Real-time conversion rates

Error frequencies

Support ticket trends

Qualitative Markers:

Session replay queue length

Identified friction points

Pattern frequencies
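Of the qualitative markers, pattern frequencies are the easiest to compute once each reviewed session is tagged with the friction points you saw. A sketch, assuming tags are free-text labels your team agrees on; each tag counts once per session so that a single session with a repeated tag doesn't dominate:

```python
from collections import Counter


def pattern_frequencies(tagged_sessions: dict[str, list[str]]) -> list[tuple[str, int]]:
    """Count how many reviewed sessions show each friction tag, most common first."""
    counts: Counter[str] = Counter()
    for tags in tagged_sessions.values():
        # sorted(set(...)) counts each tag once per session, in a stable order
        counts.update(sorted(set(tags)))
    return counts.most_common()
```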

With a dashboard like this, you’re primed to come up with the right features to actually solve the problems your customers face.

(But it’s optional.)

3. Building Your Session Replay Practice

Session replays aren't just a tool - they're a practice. Like any practice, they need structure to deliver value.

The Weekly Rhythm

The most successful teams build session watching into their weekly workflow. Not as a special event, but as a regular practice.

Option 1 - “Three a Week”

If you’re going all in, consider this pattern:

Start-of-week orientation: Monday mornings, 30 minutes. Watch last week's critical sessions. Understand what changed. Spot emerging issues before they become problems.

Mid-week deep dives: Wednesday workshops, 45 minutes. Bring the whole product team. Focus on one specific flow or feature. Build shared understanding.

End-week reflection: Friday afternoon, 15 minutes. Review the week's most interesting sessions. Document learnings. Plan next week's focus.

My preferred agenda for the Monday morning session:

Opening (5 mins)

- Key metrics review

- Last week's patterns

- This week's focus

Session Reviews (15 mins)

- 3 key sessions maximum

- One success case

- Two struggle cases

Action Planning (10 mins)

- Pattern documentation

- Task assignment

- Next week's focus

Option 2 - The Weekly Meeting

But if you’re not ready, consider a single weekly meeting.

This is what Andrew Capland (2x Head of Growth) did with his teams - “Fullstory Fridays”:

Just Do It!

Whether you meet three times a week, weekly, or monthly, the point is the same: make it a regular practice.

For instance, you could fold it into your Product Trio sync. Make it work for your team.

Cross-Team Collaboration

Session replays bridge the gap between teams. They create shared reality.

Developers see the impact of that edge case they thought wouldn't matter. Designers watch their carefully crafted flow fall apart in real use. Product managers understand why their metrics moved.

But this only works if you make it work.

Making It Stick

Most session replay initiatives fail within three months. They become another forgotten tool in the product development toolkit.

The successful ones share common patterns:

1. Start Small

Don't try to watch everything. Pick one critical flow. Master it. Only then expand.

At Affirm, we started with just the checkout flow. Nothing else mattered until we understood that completely.

2. Build the Habit

Schedule it. Make it routine. Treat it like any other critical meeting.

The best sessions are often the shortest - 15 minutes of focused observation beats hours of aimless watching.

3. Share the Load

Rotate session watching responsibility through the team. Fresh eyes see different things.

But maintain continuity. Each week's watcher briefs the next. Patterns emerge across observers.

4. Document Everything

Create a simple template:

What were we looking for?

What did we see?

What surprised us?

What should we do about it?

Keep it in your team wiki. Make it searchable.

Let the insights compound.

4. Common Pitfalls

Even experienced teams fall into common traps:

Confirmation Bias

Statistical Significance

Solution Jumping

Poor Implementation

Let me explain.

Mistake 1 - The Confirmation Bias Trap

We see what we want to see. Every product person has pet theories about their users.

Session replays can become a dangerous tool for confirmation bias. We watch until we see what we expected to see.

The fix: Watch with others. Different perspectives break our preconceptions.

Mistake 2 - The Statistical Significance Fallacy

"But it's just one user!"

Yes, and no.

Session replays aren't about statistical significance. They're about understanding depth.

One user struggling for 10 minutes teaches you more than 1000 users bouncing without a trace.

Mistake 3 - The Solution Jump

The hardest skill: watching without solving.

Product people are problem solvers. We see an issue, we want to fix it.

But premature solutions blind us to deeper patterns.

Force yourself to watch three more sessions before proposing any solution.

The real problem is rarely the first one you see.

Mistake 4 - Poor Implementation

The technical details matter. Get these right.

In particular, remember to capture all of:

Mouse movements

Scroll position

Form interactions

Error states

A tool should take care of this for you, but make sure it’s configured correctly!

Many teams miss at least one of these.
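A quick audit can catch the gap before it leaves holes in your recordings. This sketch assumes you can express your replay setup as simple on/off flags; the flag names below are hypothetical and do not correspond to LogRocket's or any other tool's actual configuration options, so map each one to your tool's documented settings.

```python
# Hypothetical flag names; map each one to your replay tool's real setting.
REQUIRED_CAPTURE = ("mouse_movements", "scroll_position", "form_interactions", "error_states")


def missing_capture(config: dict[str, bool]) -> list[str]:
    """Return required capture settings that are absent or disabled."""
    return [flag for flag in REQUIRED_CAPTURE if not config.get(flag, False)]


print(missing_capture({"mouse_movements": True, "scroll_position": True, "form_interactions": False}))
# → ['form_interactions', 'error_states']
```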

And finally: what does the future look like for session replays and PMs?

5. The Future of Product Management

Session replays are just the beginning.

The future of product management requires PMs to extract insights via:

Quantitative breadth (analytics)

Qualitative depth (session replays)

Direct interaction (user research)

Predictive modeling (AI)

But that future only works if we master each piece.

Session replays bridge the gap between numbers and understanding. They show us reality, unfiltered.

Use them wisely. Build your practice. Watch with purpose.

Your users are trying to tell you something.

Sponsored by LogRocket:

LogRocket helps companies like Appfire, Jasper, and Ramp proactively identify opportunities to improve their digital experience. Over 3,000 customers trust LogRocket to boost critical growth metrics like engagement, conversion, and adoption.

Final Thoughts

The reality is: tools like session replay are only as good as your practice.

These frameworks aren't theoretical. They're battle-tested. I've used them at multiple companies, refined them with dozens of teams, and seen them drive real results.

The key is starting small, building the habit, and gradually expanding your practice.

Remember: Every frustrated user is trying to tell you something.

These tools and frameworks help you listen better.

Now you have everything you need to build a world-class session replay practice.

The question is: What will you learn when you start watching closely?

To remove ads, you can go paid:

Hello India! 🇮🇳

Shout out to my Product Growth family in India.

I'm visiting from 16th November to 23rd November:

Delhi: 11/16-11/19

Bangalore: 11/19-11/22

Mumbai: 11/23

Check out this page for all the event details.

Looking forward to seeing you all!

Up Next

I hope you enjoyed our latest podcast with Shubham Khoker. Up next we have Henry Schuck, Vineeth Madhusudanan, and Aaron Dinin.

And I hope you enjoyed the last newsletter piece on How to Rock the Team Matching Process. After that, we have:

How Sprig Grows

The Product Strategy Interview Revisited

Storytelling for PMs (with collaborator Roshan Gupta)

I can’t wait to share it all with you!

Aakash

P.S. You can earn free months of the paid edition of Product Growth by referring friends (tip: make a post on social media about value you found and use this link):

P.S.2. Alternatively, you can ask your employer: