Everything you need to know about AI (for PMs and builders)

The first principles of AI so that you can transform your products with new AI applications

There’s no shortage of information on AI. But most of it skips the basics and jumps straight to a specific news topic.

I wanted to create a foundational resource for smart builders. To answer:

What are the first principles of AI?

How do the various models work, and what makes them AI?

Who is set to accrete value, and who is set to lose it?

This is the resource for builders who want to go from a 6th-grade understanding (mainstream media) to a 12th-grade understanding in an afternoon.

It covers the basic building blocks for bringing a first-principles approach to AI into your product.

A big shout-out to Chamath Palihapitiya for his Deep Dive on Artificial Intelligence, which inspired me to write this piece.

Today’s Post

Words: 5,111 | Estimated Reading Time: 24 minutes

A Selected Primer on AI

With deep dives on 4 Key Topics:

Electrical Circuitry

Computer Logic

Neural Networks

LLMs

The taxonomy of AI models

The universe of AI companies

Expected applications by industry

The timelines for future AGI and ASI

At the end of each section, I include links to videos and deep dives to uncover the next layer of each onion.

A Selected Primer on AI

The story of AI begins with trying to replicate the human brain.

It begins with Alan Turing in 1950

In 1950, Alan Turing (the subject of the 2014 Hollywood biopic The Imitation Game) published “Computing Machinery and Intelligence,” in which he proposed a test:

The test involves two humans and a machine. A human questioner asks the same questions of a human respondent and a computer respondent, without knowing which is which.

Then, the questioner judges which respondent is the human and which is the machine.

A machine was said to “pass” the test when its answers were indistinguishable from a human’s.

This set off interest in the human brain

The goal of passing the Turing test drew researchers to the idea of building a computer that works like the human brain.

This raises the question: how does the human brain work?

There are a number of important processes at play:

Sensory stimuli (say, the sight and sound of a person talking) enter our eyes and ears and are converted into electrical signals

These signals travel to the brain, which interprets them as a series of sounds or images

Neurons in different regions of the brain communicate with each other and activate together, enabling the brain to interpret the inputs as a person speaking specific words

It’s really that third step, what happens inside the brain, that AI is concerned with (although all the steps are interesting).

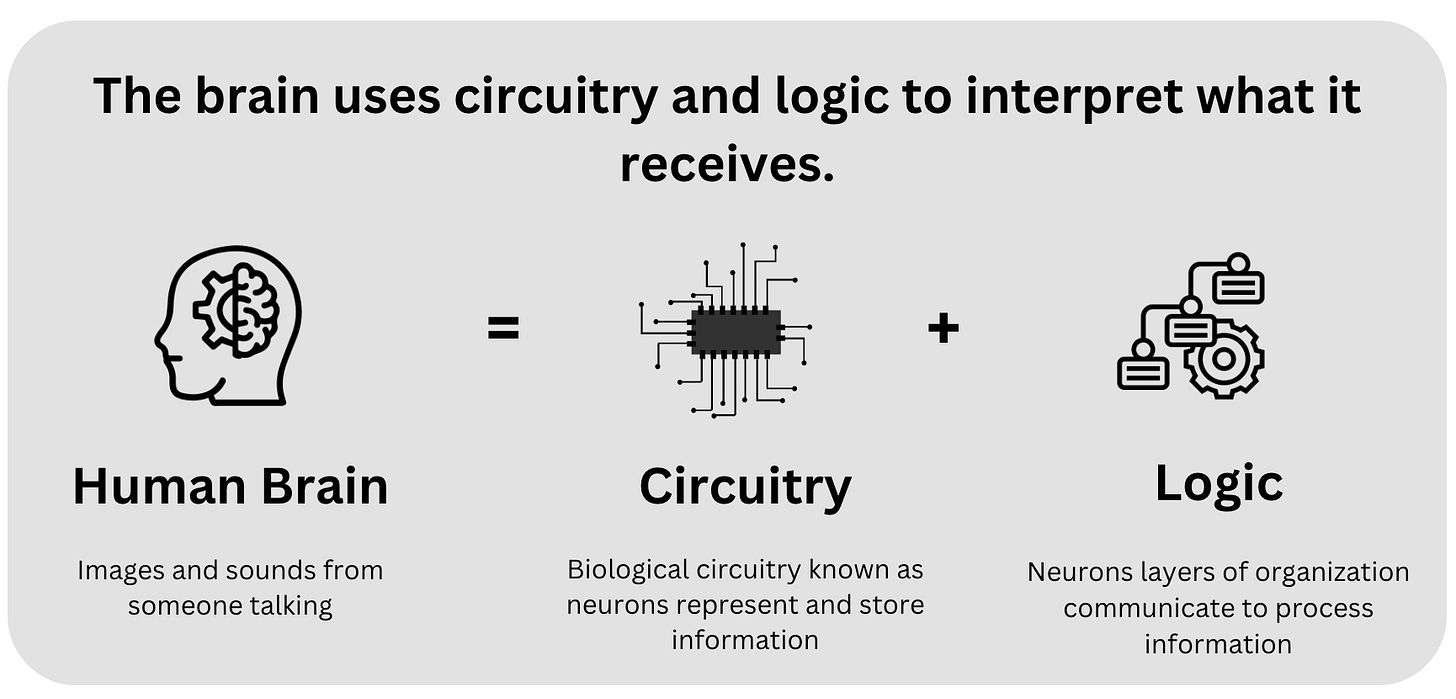

Brain = Circuitry + Logic

Fundamentally, the brain is a combination of biological circuitry and logic that can process large amounts of information in real-time.

AI research has attempted to replicate that in the form of electrical circuitry and neural networks.

So circuitry and logic will be the first two of the five key concepts that follow. After that, we’ll go deeper into neural networks, then transformers, and finally LLMs.

Additional Information

YouTube:

Turing Test: Can Machines Think? by Lex Fridman

The Rise of Artificial Intelligence by Brandon Lisy and Ashlee Vance

Reading:

Of Circuits and Brains by Martinez and Sprecher

Do neural networks really work like neurons? by Yariv Adan

Key Topic #1: Electrical Circuitry

If the brain is circuitry plus logic, then building an artificial one starts with mastering electrical circuitry.

And if you’ve seen a stock chart for Nvidia lately, you know we’ve done some pretty amazing things there.

Let’s work our way up to GPUs, starting from the basics.

Transistors

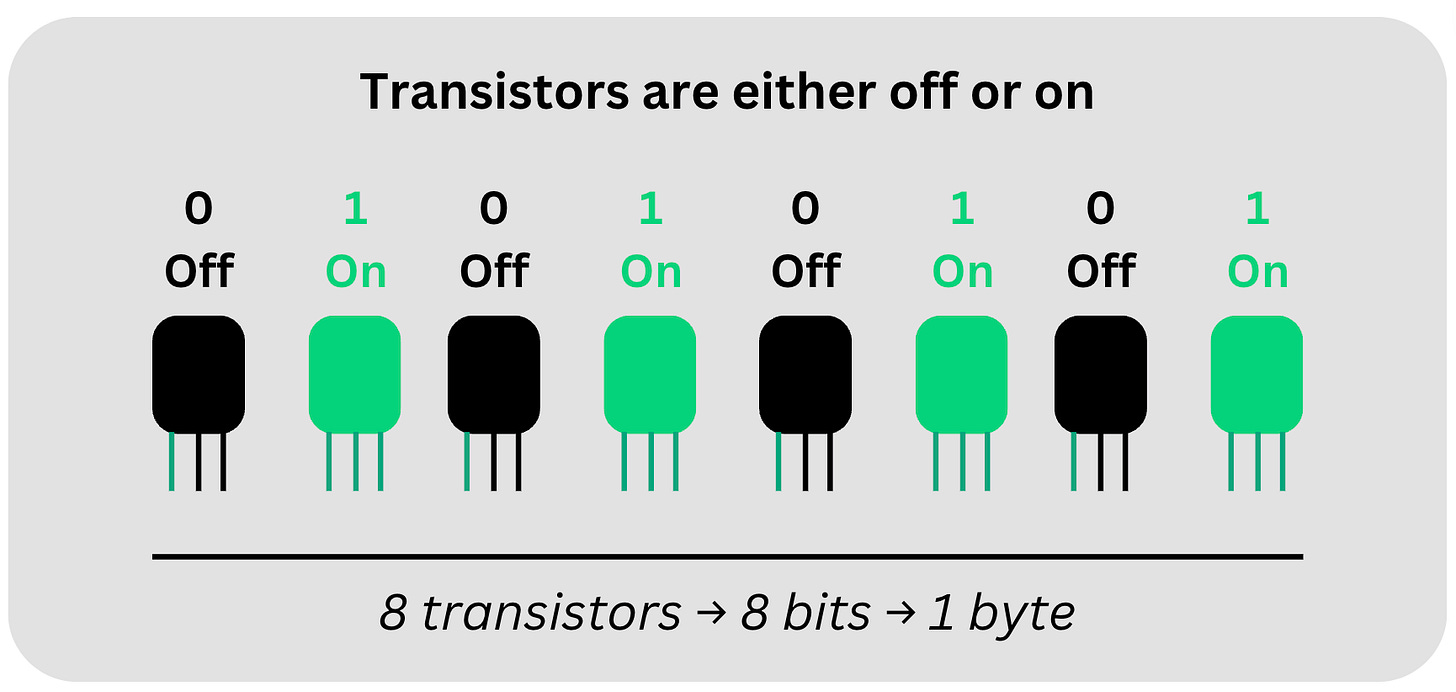

The building block of computers is the transistor. Transistors are semiconductor devices that act as electrical switches: applying a voltage flips them between two states, off and on.

In other words, the native language of computers is binary.

Each on/off value is one bit, and 8 bits make a byte. Those are the bytes you hear about:

A kilobyte is 1 thousand bytes

A megabyte is 1 million bytes

A gigabyte is 1 billion bytes
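To make the bit-and-byte arithmetic concrete, here is a minimal Python sketch. The function and constant names are illustrative, not from any library. It packs eight on/off values into one byte and defines the decimal units listed above. (Operating systems sometimes use binary units instead, where a kibibyte is 1,024 bytes.)

```python
# A minimal sketch: 8 on/off values (bits) packed into one byte, plus the
# decimal (SI) storage units. Names here are illustrative only.

def bits_to_byte(bits: list[int]) -> int:
    """Pack 8 bits (most significant first) into an integer from 0 to 255."""
    if len(bits) != 8 or any(b not in (0, 1) for b in bits):
        raise ValueError("a byte is exactly 8 bits, each 0 (off) or 1 (on)")
    value = 0
    for b in bits:
        value = value * 2 + b  # shift left one binary place, then add the new bit
    return value


# Decimal units: each step is 1,000x the previous one.
KILOBYTE = 1_000
MEGABYTE = 1_000 * KILOBYTE
GIGABYTE = 1_000 * MEGABYTE

if __name__ == "__main__":
    print(bits_to_byte([0, 1, 0, 0, 0, 0, 0, 1]))  # 65
    print(bits_to_byte([1] * 8))                   # 255, the largest byte value
    print(GIGABYTE * 8)                            # bits in one gigabyte
```

Eight switches give 2^8 = 256 possible patterns, which is why a byte holds values from 0 to 255.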

Cores

Many transistors are organized into a single core.

Even last-generation 7nm processes pack hundreds of millions of transistors into a single core just a few square millimeters in size.

A single core processes tasks sequentially. So modern processors are organized into multiple cores that execute tasks in parallel.

These are the typical CPUs that ship in laptops and desktops.
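Here’s a rough Python sketch of sequential versus parallel execution, using the standard library’s `concurrent.futures`. The prime-counting task and the helper names are hypothetical stand-ins for any CPU-heavy job. The point is that splitting the work into chunks lets several cores run at once while the answer stays the same.

```python
# A minimal sketch: one CPU-bound job run sequentially on one core, then split
# into chunks that separate worker processes (and so separate cores) can run
# at the same time. count_primes is a hypothetical stand-in task.
import os
from concurrent.futures import ProcessPoolExecutor


def count_primes(bounds: tuple[int, int]) -> int:
    """Count primes in [start, stop) by trial division (deliberately slow)."""
    start, stop = bounds
    count = 0
    for n in range(max(start, 2), stop):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def split_range(stop: int, parts: int) -> list[tuple[int, int]]:
    """Split [0, stop) into at most `parts` contiguous, non-overlapping chunks."""
    step = max(1, -(-stop // parts))  # ceiling division, never zero
    return [(i, min(i + step, stop)) for i in range(0, stop, step)]


def sequential(stop: int) -> int:
    # One core walks through the whole range, one number after another.
    return count_primes((0, stop))


def parallel(stop: int, workers: int) -> int:
    # Each chunk goes to a worker process; the OS can schedule those
    # processes on different cores so they run simultaneously.
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(count_primes, split_range(stop, workers)))


if __name__ == "__main__":
    cores = os.cpu_count() or 1
    limit = 100_000
    print(sequential(limit), parallel(limit, cores))  # same count both ways
```

On a multi-core machine the parallel version typically finishes sooner, although starting worker processes has overhead, so very small jobs may not benefit.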

For instance, these are the stats for Apple’s latest M3 Max: 92 billion transistors on a single chip, split across three kinds of cores:

16-core CPU

40-core GPU

16-core Neural Engine

Specialized Processing Units

What’s the difference between the CPU, GPU, and neural engine? These are specialized processing units.

The CPU is the brain of the computer and excels at low-latency, general-purpose processing

GPUs were originally designed for 3D graphics, but their architecture of many simpler cores running in parallel has turned out to be well-suited for AI workloads

The neural engine is designed even more specifically for the millions of parallel calculations demanded by today’s neural networks
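Why do parallel chips suit AI? Much of a neural network’s work is matrix multiplication, and in a matrix multiplication every output cell is an independent dot product. The sketch below is plain Python with hypothetical function names and an illustrative layer size; it shows both that independence and how quickly the number of multiply-adds grows.

```python
# A minimal sketch of why parallel hardware suits neural networks: every
# output cell of a matrix product is its own independent dot product, so
# many cores could each compute one cell at the same time.

def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Naive (rows x inner) @ (inner x cols) matrix multiply."""
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions must match")
    # No output cell depends on any other output cell; this is the work
    # a GPU or neural engine spreads across its many cores.
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]


def multiply_adds(rows: int, inner: int, cols: int) -> int:
    """Multiply-add operations in a (rows x inner) @ (inner x cols) product."""
    return rows * inner * cols


if __name__ == "__main__":
    print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
    # Hypothetical layer: a batch of 32 inputs, 1,024 features in, 1,024 out.
    print(multiply_adds(32, 1_024, 1_024))  # 33554432
```

Real frameworks hand this work to optimized GPU or neural-engine kernels rather than Python loops, but the shape of the computation is the same: over 33 million multiply-adds for one modest layer on one batch.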

That’s just Apple Silicon.

Nvidia

The demand for cores never ends, especially for high-end models like GPT-4.

Models like GPT-4 run on NVIDIA’s CUDA platform across thousands of expensive data-center GPUs such as the H100.

A single H100 (in its SXM5 form) is one specialized GPU with 80 billion transistors, organized into:

132 streaming multiprocessors (SMs)

528 Tensor Cores

16,896 CUDA cores

But, for that, you’ll have to pay $73K+ and join the waitlist.

Google

Beyond Apple and Nvidia, one other type of specialized processor has become popular in AI discussions: TPUs.

Created by Google, TPUs (Tensor Processing Units) specialize in the dense matrix operations that neural networks run on, and were originally built to accelerate TensorFlow.

All these advances in circuitry have enabled vast advances in logic to get closer to how the human brain works, which is our next key topic.

Additional Information

YouTube:

How Nvidia Grew From Gaming To A.I. Giant, Now Powering ChatGPT by CNBC

Why Brain-like Computers Are Hard by The Asianometry Newsletter

Reading:

The AI Chip Revolution by Doo Prime

Key Topic #2: Computer Logic

With all these improvements in electrical circuitry, we have pushed the limits of computer logic:

It begins with pattern recognition

But before we can build our way up to the deep neural networks of today, we need to start with how computer logic systems work:
